Quotes

i. “‘Intuition’ comes first. Reasoning comes second.” (Llewelyn & Doorn, Clinical Psychology: A Very Short Introduction, Oxford University Press)

ii. “We tend to cope with difficulties in ways that are familiar to us — acting in ways that were helpful to us in the past, even if these ways are now ineffective or destructive.” (-ll-)

iii. “We all thrive when given attention, and being encouraged and praised is more effective at changing our behaviour than being punished. The best way to increase the frequency of a behaviour is to reward it.” (-ll-)

iv. “You can’t make people change if they don’t want to, but you can support and encourage them to make changes.” (-ll-)

v. “You shall know a word by the company it keeps” (John Rupert Firth, as quoted in Thierry Poibeau’s Machine Translation, MIT Press).

vi. “The basic narrative of sedentism and agriculture has long survived the mythology that originally supplied its charter. From Thomas Hobbes to John Locke to Giambattista Vico to Lewis Henry Morgan to Friedrich Engels to Herbert Spencer to Oswald Spengler to social Darwinist accounts of social evolution in general, the sequence of progress from hunting and gathering to nomadism to agriculture (and from band to village to town to city) was settled doctrine. Such views nearly mimicked Julius Caesar’s evolutionary scheme from households to kindreds to tribes to peoples to the state (a people living under laws), wherein Rome was the apex […]. Though they vary in details, such accounts record the march of civilization conveyed by most pedagogical routines and imprinted on the brains of schoolgirls and schoolboys throughout the world. The move from one mode of subsistence to the next is seen as sharp and definitive. No one, once shown the techniques of agriculture, would dream of remaining a nomad or forager. Each step is presumed to represent an epoch-making leap in mankind’s well-being: more leisure, better nutrition, longer life expectancy, and, at long last, a settled life that promoted the household arts and the development of civilization. Dislodging this narrative from the world’s imagination is well nigh impossible; the twelve-step recovery program required to accomplish that beggars the imagination. I nevertheless make a small start here. It turns out that the greater part of what we might call the standard narrative has had to be abandoned once confronted with accumulating archaeological evidence.” (James C. Scott, Against the Grain, Yale University Press)

vii. “Thanks to hominids, much of the world’s flora and fauna consist of fire-adapted species (pyrophytes) that have been encouraged by burning. The effects of anthropogenic fire are so massive that they might be judged, in an evenhanded account of the human impact on the natural world, to overwhelm crop and livestock domestications.” (-ll-)

viii. “Most discussions of plant domestication and permanent settlement […] assume without further ado that early peoples could not wait to settle down in one spot. Such an assumption is an unwarranted reading back from the standard discourses of agrarian states stigmatizing mobile populations as primitive. […] Nor should the terms “pastoralist,” “agriculturalist,” “hunter,” or “forager,” at least in their essentialist meanings, be taken for granted. They are better understood as defining a spectrum of subsistence activities, not separate peoples […] A family or village whose crops had failed might turn wholly or in part to herding; pastoralists who had lost their flocks might turn to planting. Whole areas during a drought or wetter period might radically shift their subsistence strategy. To treat those engaged in these different activities as essentially different peoples inhabiting different life worlds is again to read back the much later stigmatization of pastoralists by agrarian states to an era where it makes no sense.” (-ll-)

ix. “Neither holy, nor Roman, nor an empire” (Voltaire, on the Holy Roman Empire, as quoted in Joachim Whaley’s The Holy Roman Empire: A Very Short Introduction, Oxford University Press)

x. “We don’t outgrow difficult conversations or get promoted past them. The best workplaces and most effective organizations have them. The family down the street that everyone thinks is perfect has them. Loving couples and lifelong friends have them. In fact, we can make a reasonable argument that engaging (well) in difficult conversations is a sign of health in a relationship. Relationships that deal productively with the inevitable stresses of life are more durable; people who are willing and able to “stick through the hard parts” emerge with a stronger sense of trust in each other and the relationship, because now they have a track record of having worked through something hard and seen that the relationship survived.” (Stone et al., Difficult Conversations, Penguin Publishing Group)

xi. “[D]ifficult conversations are almost never about getting the facts right. They are about conflicting perceptions, interpretations, and values. […] They are not about what is true, they are about what is important. […] Interpretations and judgments are important to explore. In contrast, the quest to determine who is right and who is wrong is a dead end. […] When competent, sensible people do something stupid, the smartest move is to try to figure out, first, what kept them from seeing it coming and, second, how to prevent the problem from happening again. Talking about blame distracts us from exploring why things went wrong and how we might correct them going forward.” (-ll-)

xii. “[W]e each have different stories about what is going on in the world. […] In the normal course of things, we don’t notice the ways in which our story of the world is different from other people’s. But difficult conversations arise at precisely those points where important parts of our story collide with another person’s story. We assume the collision is because of how the other person is; they assume it’s because of how we are. But really the collision is a result of our stories simply being different, with neither of us realizing it. […] To get anywhere in a disagreement, we need to understand the other person’s story well enough to see how their conclusions make sense within it. And we need to help them understand the story in which our conclusions make sense. Understanding each other’s stories from the inside won’t necessarily “solve” the problem, but […] it’s an essential first step.” (-ll-)

xiii. “I am really nervous about the word “deserve”. In some cosmic sense nobody “deserves” anything – try to tell the universe you don’t deserve to grow old and die, then watch it laugh at [you] as you die anyway.” (Scott Alexander)

xiv. “How we spend our days is, of course, how we spend our lives.” (Annie Dillard)

xv. “If you do not change direction, you may end up where you are heading.” (Lao Tzu)

xvi. “The smart way to keep people passive and obedient is to strictly limit the spectrum of acceptable opinion, but allow very lively debate within that spectrum.” (Chomsky)

xvii. “If we don’t believe in free expression for people we despise, we don’t believe in it at all.” (-ll-)

xviii. “I weigh the man, not his title; ’tis not the king’s stamp can make the metal better.” (William Wycherley)

xix. “Money is the fruit of evil as often as the root of it.” (Henry Fielding)

xx. “To whom nothing is given, of him can nothing be required.” (-ll-)

Algorithms to live by…

“…algorithms are not confined to mathematics alone. When you cook bread from a recipe, you’re following an algorithm. When you knit a sweater from a pattern, you’re following an algorithm. When you put a sharp edge on a piece of flint by executing a precise sequence of strikes with the end of an antler—a key step in making fine stone tools—you’re following an algorithm. Algorithms have been a part of human technology ever since the Stone Age.

* * *

In this book, we explore the idea of human algorithm design—searching for better solutions to the challenges people encounter every day. Applying the lens of computer science to everyday life has consequences at many scales. Most immediately, it offers us practical, concrete suggestions for how to solve specific problems. Optimal stopping tells us when to look and when to leap. The explore/exploit tradeoff tells us how to find the balance between trying new things and enjoying our favorites. Sorting theory tells us how (and whether) to arrange our offices. Caching theory tells us how to fill our closets. Scheduling theory tells us how to fill our time. At the next level, computer science gives us a vocabulary for understanding the deeper principles at play in each of these domains. As Carl Sagan put it, “Science is a way of thinking much more than it is a body of knowledge.” Even in cases where life is too messy for us to expect a strict numerical analysis or a ready answer, using intuitions and concepts honed on the simpler forms of these problems offers us a way to understand the key issues and make progress. […] tackling real-world tasks requires being comfortable with chance, trading off time with accuracy, and using approximations.”

…

I recall Zach Weinersmith recommending the book, and I seem to recall him mentioning when he did so that he’d put off reading it ‘because it sounded like a self-help book’ (paraphrasing). I’m not actually sure how to categorize it but I do know that I really enjoyed it; I gave it five stars on goodreads and added it to my list of favourite books.

The book covers a variety of decision problems and tradeoffs which people face in their every day lives, as well as strategies for how to approach such problems and identify good solutions (if they exist). The explore/exploit tradeoff so often implicitly present (e.g.: ‘when to look for a new restaurant, vs. picking one you are already familiar with’, or perhaps: ‘when to spend time with friends you already know, vs. spending time trying to find new (/better?) friends?’), optimal stopping rules (‘at which point do you stop looking for a romantic partner and decide that ‘this one is the one’?’ – this is perhaps a well-known problem with a well-known solution, but had you considered that you might use the same analytical framework for questions such as: ‘when to stop looking for a better parking spot and just accept that this one is probably the best one you’ll be able to find?’?), sorting problems (good and bad ways of sorting, why sort, when is sorting even necessary/required?, etc.), scheduling theory (how to handle task management in a good way, so that you optimize over a given constraint set – some examples from this part are included in the quotes below), satisficing vs optimizing (heuristics, ‘when less is more’, etc.), etc. The book is mainly a computer science book, but it is also to some extent an implicitly interdisciplinary work covering material from a variety of other areas such as statistics, game theory, behavioral economics and psychology. There is a major focus throughout on providing insights which are actionable and can actually be used by the reader, e.g. through the translation of identified solutions to heuristics which might be applied in every day life. The book is more pop-science-like than any book I’d have liked to read 10 years ago, and there are too many personal anecdotes for my taste included, but in some sense this never felt like a major issue while I was reading; a lot of interesting ideas and topics are covered, and the amount of fluff is within acceptable limits – a related point is also that the ‘fluff’ is also part of what makes the book relevant, because the authors really focus on tradeoffs and problems which really are highly relevant to some potentially key aspects of most people’s lives, including their own.

Below I have added some sample quotes from the book. If you like the quotes you’ll like the book, it’s full of this kind of stuff. I definitely recommend it to anyone remotely interested in decision theory and related topics.

…

“…one of the deepest truths of machine learning is that, in fact, it’s not always better to use a more complex model, one that takes a greater number of factors into account. And the issue is not just that the extra factors might offer diminishing returns—performing better than a simpler model, but not enough to justify the added complexity. Rather, they might make our predictions dramatically worse. […] overfitting poses a danger every time we’re dealing with noise or mismeasurement – and we almost always are. […] Many prediction algorithms […] start out by searching for the single most important factor rather than jumping to a multi-factor model. Only after finding that first factor do they look for the next most important factor to add to the model, then the next, and so on. Their models can therefore be kept from becoming overly complex simply by stopping the process short, before overfitting has had a chance to creep in. […] This kind of setup — where more time means more complexity — characterizes a lot of human endeavors. Giving yourself more time to decide about something does not necessarily mean that you’ll make a better decision. But it does guarantee that you’ll end up considering more factors, more hypotheticals, more pros and cons, and thus risk overfitting. […] The effectiveness of regularization in all kinds of machine-learning tasks suggests that we can make better decisions by deliberately thinking and doing less. If the factors we come up with first are likely to be the most important ones, then beyond a certain point thinking more about a problem is not only going to be a waste of time and effort — it will lead us to worse solutions. […] sometimes it’s not a matter of choosing between being rational and going with our first instinct. Going with our first instinct can be the rational solution. The more complex, unstable, and uncertain the decision, the more rational an approach that is.” (…for more on these topics I recommend Gigerenzer)

“If you’re concerned with minimizing maximum lateness, then the best strategy is to start with the task due soonest and work your way toward the task due last. This strategy, known as Earliest Due Date, is fairly intuitive. […] Sometimes due dates aren’t our primary concern and we just want to get stuff done: as much stuff, as quickly as possible. It turns out that translating this seemingly simple desire into an explicit scheduling metric is harder than it sounds. One approach is to take an outsider’s perspective. We’ve noted that in single-machine scheduling, nothing we do can change how long it will take us to finish all of our tasks — but if each task, for instance, represents a waiting client, then there is a way to take up as little of their collective time as possible. Imagine starting on Monday morning with a four-day project and a one-day project on your agenda. If you deliver the bigger project on Thursday afternoon (4 days elapsed) and then the small one on Friday afternoon (5 days elapsed), the clients will have waited a total of 4 + 5 = 9 days. If you reverse the order, however, you can finish the small project on Monday and the big one on Friday, with the clients waiting a total of only 1 + 5 = 6 days. It’s a full workweek for you either way, but now you’ve saved your clients three days of their combined time. Scheduling theorists call this metric the “sum of completion times.” Minimizing the sum of completion times leads to a very simple optimal algorithm called Shortest Processing Time: always do the quickest task you can. Even if you don’t have impatient clients hanging on every job, Shortest Processing Time gets things done.”

“Of course, not all unfinished business is created equal. […] In scheduling, this difference of importance is captured in a variable known as weight. […] The optimal strategy for [minimizing weighted completion time] is a simple modification of Shortest Processing Time: divide the weight of each task by how long it will take to finish, and then work in order from the highest resulting importance-per-unit-time [..] to the lowest. […] this strategy … offers a nice rule of thumb: only prioritize a task that takes twice as long if it’s twice as important.”

“So far we have considered only factors that make scheduling harder. But there is one twist that can make it easier: being able to stop one task partway through and switch to another. This property, “preemption,” turns out to change the game dramatically. Minimizing maximum lateness … or the sum of completion times … both cross the line into intractability if some tasks can’t be started until a particular time. But they return to having efficient solutions once preemption is allowed. In both cases, the classic strategies — Earliest Due Date and Shortest Processing Time, respectively — remain the best, with a fairly straightforward modification. When a task’s starting time comes, compare that task to the one currently under way. If you’re working by Earliest Due Date and the new task is due even sooner than the current one, switch gears; otherwise stay the course. Likewise, if you’re working by Shortest Processing Time, and the new task can be finished faster than the current one, pause to take care of it first; otherwise, continue with what you were doing.”

“…even if you don’t know when tasks will begin, Earliest Due Date and Shortest Processing Time are still optimal strategies, able to guarantee you (on average) the best possible performance in the face of uncertainty. If assignments get tossed on your desk at unpredictable moments, the optimal strategy for minimizing maximum lateness is still the preemptive version of Earliest Due Date—switching to the job that just came up if it’s due sooner than the one you’re currently doing, and otherwise ignoring it. Similarly, the preemptive version of Shortest Processing Time—compare the time left to finish the current task to the time it would take to complete the new one—is still optimal for minimizing the sum of completion times. In fact, the weighted version of Shortest Processing Time is a pretty good candidate for best general-purpose scheduling strategy in the face of uncertainty. It offers a simple prescription for time management: each time a new piece of work comes in, divide its importance by the amount of time it will take to complete. If that figure is higher than for the task you’re currently doing, switch to the new one; otherwise stick with the current task. This algorithm is the closest thing that scheduling theory has to a skeleton key or Swiss Army knife, the optimal strategy not just for one flavor of problem but for many. Under certain assumptions it minimizes not just the sum of weighted completion times, as we might expect, but also the sum of the weights of the late jobs and the sum of the weighted lateness of those jobs.”

“…preemption isn’t free. Every time you switch tasks, you pay a price, known in computer science as a context switch. When a computer processor shifts its attention away from a given program, there’s always a certain amount of necessary overhead. […] It’s metawork. Every context switch is wasted time. Humans clearly have context-switching costs too. […] Part of what makes real-time scheduling so complex and interesting is that it is fundamentally a negotiation between two principles that aren’t fully compatible. These two principles are called responsiveness and throughput: how quickly you can respond to things, and how much you can get done overall. […] Establishing a minimum amount of time to spend on any one task helps to prevent a commitment to responsiveness from obliterating throughput […] The moral is that you should try to stay on a single task as long as possible without decreasing your responsiveness below the minimum acceptable limit. Decide how responsive you need to be — and then, if you want to get things done, be no more responsive than that. If you find yourself doing a lot of context switching because you’re tackling a heterogeneous collection of short tasks, you can also employ another idea from computer science: “interrupt coalescing.” If you have five credit card bills, for instance, don’t pay them as they arrive; take care of them all in one go when the fifth bill comes.”

Links and random stuff

i. Pulmonary Aspects of Exercise and Sports.

“Although the lungs are a critical component of exercise performance, their response to exercise and other environmental stresses is often overlooked when evaluating pulmonary performance during high workloads. Exercise can produce capillary leakage, particularly when left atrial pressure increases related to left ventricular (LV) systolic or diastolic failure. Diastolic LV dysfunction that results in elevated left atrial pressure during exercise is particularly likely to result in pulmonary edema and capillary hemorrhage. Data from race horses, endurance athletes, and triathletes support the concept that the lungs can react to exercise and immersion stress with pulmonary edema and pulmonary hemorrhage. Immersion in water by swimmers and divers can also increase stress on pulmonary capillaries and result in pulmonary edema.”

“Zavorsksy et al.11 studied individuals under several different workloads and performed lung imaging to document the presence or absence of lung edema. Radiographic image readers were blinded to the exposures and reported visual evidence of lung fluid. In individuals undergoing a diagnostic graded exercise test, no evidence of lung edema was noted. However, 15% of individuals who ran on a treadmill at 70% of maximum capacity for 2 hours demonstrated evidence of pulmonary edema, as did 65% of those who ran at maximum capacity for 7 minutes. Similar findings were noted in female athletes.12 Pingitore et al. examined 48 athletes before and after completing an iron man triathlon. They used ultrasound to detect lung edema and reported the incidence of ultrasound lung comets.13 None of the athletes had evidence of lung edema before the event, while 75% showed evidence of pulmonary edema immediately post-race, and 42% had persistent findings of pulmonary edema 12 hours post-race. Their data and several case reports14–16 have demonstrated that extreme exercise can result in pulmonary edema”

“Conclusions

Sports and recreational participation can result in lung injury caused by high pulmonary pressures and increased blood volume that raises intracapillary pressure and results in capillary rupture with subsequent pulmonary edema and hemorrhage. High-intensity exercise can result in accumulation of pulmonary fluid and evidence of pulmonary edema. Competitive swimming can result in both pulmonary edema related to fluid shifts into the thorax from immersion and elevated LV end diastolic pressure related to diastolic dysfunction, particularly in the presence of high-intensity exercise. […] The most important approach to many of these disorders is prevention. […] Prevention strategies include avoiding extreme exercise, avoiding over hydration, and assuring that inspiratory resistance is minimized.”

…

ii. Some interesting thoughts on journalism and journalists from a recent SSC Open Thread by user ‘Well’ (quotes from multiple comments). His/her thoughts seem to line up well with my own views on these topics, and one of the reasons why I don’t follow the news is that my own answer to the first question posed below is quite briefly that, ‘…well, I don’t’:

“I think a more fundamental problem is the irrational expectation that newsmedia are supposed to be a reliable source of information in the first place. Why do we grant them this make-believe power?

The English and Acting majors who got together to put on the shows in which they pose as disinterested arbiters of truth use lots of smoke and mirror techniques to appear authoritative: they open their programs with regal fanfare, they wear fancy suits, they make sure to talk or write in a way that mimics the disinterestedness of scholarly expertise, they appear with spinning globes or dozens of screens behind them as if they’re omniscient, they adorn their publications in fancy black-letter typefaces and give them names like “Sentinel” and “Observer” and “Inquirer” and “Plain Dealer”, they invented for themselves the title of “journalists” as if they take part in some kind of peer review process… But why do these silly tricks work? […] what makes the press “the press” is the little game of make-believe we play where an English or Acting major puts on a suit, talks with a funny cadence in his voice, sits in a movie set that looks like God’s Control Room, or writes in a certain format, using pseudo-academic language and symbols, and calls himself a “journalist” and we all pretend this person is somehow qualified to tell us what is going on in the world.

Even when the “journalist” is saying things we agree with, why do we participate in this ridiculous charade? […] I’m not against punditry or people putting together a platform to talk about things that happen. I’m against people with few skills other than “good storyteller” or “good writer” doing this while painting themselves as “can be trusted to tell you everything you need to know about anything”. […] Inasumuch as what I’m doing can be called “defending” them, I’d “defend” them not because they are providing us with valuable facts (ha!) but because they don’t owe us facts, or anything coherent, in the first place. It’s not like they’re some kind of official facts-providing service. They just put on clothes to look like one.”

…

iii. Chatham house rule.

…

iv. Sex Determination: Why So Many Ways of Doing It?

“Sexual reproduction is an ancient feature of life on earth, and the familiar X and Y chromosomes in humans and other model species have led to the impression that sex determination mechanisms are old and conserved. In fact, males and females are determined by diverse mechanisms that evolve rapidly in many taxa. Yet this diversity in primary sex-determining signals is coupled with conserved molecular pathways that trigger male or female development. Conflicting selection on different parts of the genome and on the two sexes may drive many of these transitions, but few systems with rapid turnover of sex determination mechanisms have been rigorously studied. Here we survey our current understanding of how and why sex determination evolves in animals and plants and identify important gaps in our knowledge that present exciting research opportunities to characterize the evolutionary forces and molecular pathways underlying the evolution of sex determination.”

…

v. So Good They Can’t Ignore You.

“Cal Newport’s 2012 book So Good They Can’t Ignore You is a career strategy book designed around four ideas.

The first idea is that ‘follow your passion’ is terrible career advice, and people who say this should be shot don’t know what they’re talking about. […] The second idea is that instead of believing in the passion hypothesis, you should adopt what Newport calls the ‘craftsman mindset’. The craftsman mindset is that you should focus on gaining rare and valuable skills, since this is what leads to good career outcomes.

The third idea is that autonomy is the most important component of a ‘dream’ job. Newport argues that when choosing between two jobs, there are compelling reasons to ‘always’ pick the one with higher autonomy over the one with lower autonomy.

The fourth idea is that having a ‘mission’ or a ‘higher purpose’ in your job is probably a good idea, and is really nice if you can find it. […] the book structure is basically: ‘following your passion is bad, instead go for Mastery[,] Autonomy and Purpose — the trio of things that have been proven to motivate knowledge workers’.” […]

“Newport argues that applying deliberate practice to your chosen skill market is your best shot at becoming ‘so good they can’t ignore you’. The key is to stretch — you want to practice skills that are just above your current skill level, so that you experience discomfort — but not too much discomfort that you’ll give up.” […]

“Newport thinks that if your job has one or more of the following qualities, you should leave your job in favour of another where you can build career capital:

- Your job presents few opportunities to distinguish yourself by developing relevant skills that are rare and valuable.

- Your job focuses on something you think is useless or perhaps even actively bad for the world.

- Your job forces you to work with people you really dislike.

If you’re in a job with any of these traits, your ability to gain rare and valuable skills would be hampered. So it’s best to get out.”

…

vi. Structural brain imaging correlates of general intelligence in UK Biobank.

“The association between brain volume and intelligence has been one of the most regularly-studied—though still controversial—questions in cognitive neuroscience research. The conclusion of multiple previous meta-analyses is that the relation between these two quantities is positive and highly replicable, though modest (Gignac & Bates, 2017; McDaniel, 2005; Pietschnig, Penke, Wicherts, Zeiler, & Voracek, 2015), yet its magnitude remains the subject of debate. The most recent meta-analysis, which included a total sample size of 8036 participants with measures of both brain volume and intelligence, estimated the correlation at r = 0.24 (Pietschnig et al., 2015). A more recent re-analysis of the meta-analytic data, only including healthy adult samples (N = 1758), found a correlation of r = 0.31 (Gignac & Bates, 2017). Furthermore, the correlation increased as a function of intelligence measurement quality: studies with better-quality intelligence tests—for instance, those including multiple measures and a longer testing time—tended to produce even higher correlations with brain volume (up to 0.39). […] Here, we report an analysis of data from a large, single sample with high-quality MRI measurements and four diverse cognitive tests. […] We judge that the large N, study homogeneity, and diversity of cognitive tests relative to previous large scale analyses provides important new evidence on the size of the brain structure-intelligence correlation. By investigating the relations between general intelligence and characteristics of many specific regions and subregions of the brain in this large single sample, we substantially exceed the scope of previous meta-analytic work in this area. […]

“We used a large sample from UK Biobank (N = 29,004, age range = 44–81 years). […] This preregistered study provides a large single sample analysis of the global and regional brain correlates of a latent factor of general intelligence. Our study design avoids issues of publication bias and inconsistent cognitive measurement to which meta-analyses are susceptible, and also provides a latent measure of intelligence which compares favourably with previous single-indicator studies of this type. We estimate the correlation between total brain volume and intelligence to be r = 0.276, which applies to both males and females. Multiple global tissue measures account for around double the variance in g in older participants, relative to those in middle age. Finally, we find that associations with intelligence were strongest in frontal, insula, anterior and medial temporal, lateral occipital and paracingulate cortices, alongside subcortical volumes (especially the thalamus) and the microstructure of the thalamic radiations, association pathways and forceps minor.”

…

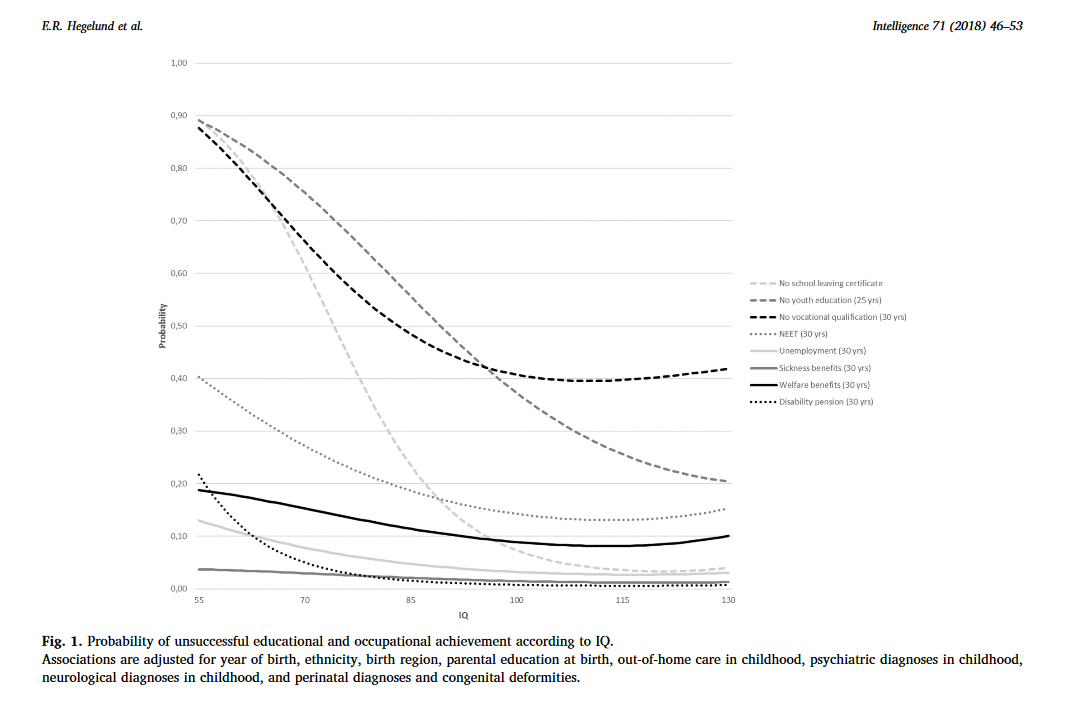

vii. Another IQ study: Low IQ as a predictor of unsuccessful educational and occupational achievement: A register-based study of 1,098,742 men in Denmark 1968–2016.

“Intelligence test score is a well-established predictor of educational and occupational achievement worldwide […]. Longitudinal studies typically report cor-relation coefficients of 0.5–0.6 between intelligence and educational achievement as assessed by educational level or school grades […], correlation coefficients of 0.4–0.5 between intelligence and occupational level […] and cor-relation coefficients of 0.2–0.4 between intelligence and income […]. Although the above-mentioned associations are well-established, low intelligence still seems to be an overlooked problem among young people struggling to complete an education or gain a foothold in the labour market […] Due to contextual differences with regard to educational system and flexibility and security on the labour market as well as educational and labour market policies, the role of intelligence in predicting unsuccessful educational and occupational courses may vary among countries. As Denmark has free admittance to education at all levels, state financed student grants for all students, and a relatively high support of students with special educational needs, intelligence might be expected to play a larger role – as socioeconomic factors might be of less importance – with regard to educational and occupational achievement compared with countries outside Scandinavia. The aim of this study was therefore to investigate the role of IQ in predicting a wide range of indicators of unsuccessful educational and occupational achievement among young people born across five decades in Denmark.”

“Individuals who differed in IQ score were found to differ with regard to all indicators of unsuccessful educational and occupational achievement such that low IQ was associated with a higher proportion of unsuccessful educational and occupational achievement. For example, among the 12.1% of our study population who left lower secondary school without receiving a certificate, 39.7% had an IQ < 80 and 23.1% had an IQ of 80–89, although these individuals only accounted for 7.8% and 13.1% of the total study population. The main analyses showed that IQ was inversely associated with all indicators of unsuccessful educational and occupational achievement in young adulthood after adjustment for covariates […] With regard to unsuccessful educational achievement, […] the probabilities of no school leaving certificate, no youth education at age 25, and no vocational qualification at age 30 decreased with increasing IQ in a cubic relation, suggesting essentially no or only weak associations at superior IQ levels. IQ had the strongest influence on the probability of no school leaving certificate. Although the probabilities of the three outcome indicators were almost the same among individuals with extremely low IQ, the probability of no school leaving certificate approached zero among individuals with an IQ of 100 or above whereas the probabilities of no youth education at age 25 and no vocational qualification at age 30 remained notably higher. […] individuals with an IQ of 70 had a median gross income of 301,347 DKK, individuals with an IQ of 100 had a median gross income of 331,854, and individuals with an IQ of 130 had a median gross income of 363,089 DKK – in the beginning of June 2018 corresponding to about 47,856 USD, 52,701 USD, and 57,662 USD, respectively. […] The results showed that among individuals undergoing education, low IQ was associated with a higher hazard rate of passing to employment, unemployment, sickness benefits receipt and welfare benefits receipt […]. This indicates that individuals with low IQ tend to leave the educational system to find employment at a younger age than individuals with high IQ, but that this early leave from the educational system often is associated with a transition into unemployment, sickness benefits receipt and welfare benefits receipt.”

“Conclusions

This study of 1,098,742 Danish men followed in national registers from 1968 to 2016 found that low IQ was a strong and consistent predictor of 10 indicators of unsuccessful educational and occupational achievement in young adulthood. Overall, it seemed that IQ had the strongest influence on the risk of unsuccessful educational achievement and on the risk of disability pension, and that the influence of IQ on educational achievement was strongest in the early educational career and decreased over time. At the community level our findings suggest that intelligence should be considered when planning interventions to reduce the rates of early school leaving and the unemployment rates and at the individual level our findings suggest that assessment of intelligence may provide crucial information for the counselling of poor-functioning schoolchildren and adolescents with regard to both the immediate educational goals and the more distant work-related future.”

Dyslexia (I)

A few years back I started out on another publication edited by the same author, the Wiley-Blackwell publication The Science of Reading: A Handbook. That book is dense and in the end I decided it wasn’t worth it to finish it – but I also learned from reading it that Snowling, the author of this book, probably knows her stuff. This book only covers a limited range of the literature on reading, but an interesting one.

I have added some quotes and links from the first chapters of the book below.

…

“Literacy difficulties, when they are not caused by lack of education, are known as dyslexia. Dyslexia can be defined as a problem with learning which primarily affects the development of reading accuracy and fluency and spelling skills. Dyslexia frequently occurs together with other difficulties, such as problems in attention, organization, and motor skills (movement) but these are not in and of themselves indicators of dyslexia. […] at the core of the problem is a difficulty in decoding words for reading and encoding them for spelling. Fluency in these processes is never achieved. […] children with specific reading difficulties show a poor response to reading instruction […] ‘response to intervention’ has been proposed as a better way of identifying likely dyslexic difficulties than measured reading skills. […] To this day, there is tension between the medical model of ‘dyslexia’ and the understanding of ‘specific learning difficulties’ in educational circles. The nub of the problem for the concept of dyslexia is that, unlike measles or chicken pox, it is not a disorder with a clear diagnostic profile. Rather, reading skills are distributed normally in the population […] dyslexia is like high blood pressure, there is no precise cut-off between high blood pressure and ‘normal’ blood pressure, but if high blood pressure remains untreated, the risk of complications is high. Hence, a diagnosis of ‘hypertension’ is warranted […] this book will show that there is remarkable agreement among researchers regarding the risk factors for poor reading and a growing number of evidence-based interventions: dyslexia definitely exists and we can do a great deal to ameliorate its effects”.

“An obvious though not often acknowledged fact is that literacy builds on a foundation of spoken language—indeed, an assumption of all education systems is that, when a child starts school, their spoken language is sufficient to support reading development. […] many children start school with considerable knowledge about books: they know that print runs from left to right (at least if you are reading English) and that you read from the front to the back of the book; and they are familiar with at least some letter names or sounds. At a basic level, reading involves translating printed symbols into pronunciations—a task referred to as decoding, which requires mapping across modalities from vision (written forms) to audition (spoken sounds). Beyond knowing letters, the beginning reader has to discover how printed words relate to spoken words and a major aim of reading instruction is to help the learner to ‘crack’ this code. To decode in English (and other alphabetic languages) requires learning about ‘grapheme–phoneme’ correspondences—literally the way in which letters or letter combinations relate to the speech sounds of spoken words: this is not a trivial task. When children use language naturally, they have only implicit knowledge of the words they use and they do not pay attention to their sounds; but this is precisely what they need to do in order to learn to decode. Indeed, they have to become ‘aware’ that words can be broken down into constituent parts like the syllable […] and that, in turn, syllables can be segmented into phonemes […]. Phonemes are the smallest sounds which differentiate words; for example, ‘pit’ and ‘bit’ differ by a single phoneme [b]-[p] (in fact, both are referred to as ‘stop consonants’ and they differ only by a single phonemic feature, namely the timing of the voicing onset of the consonant). In the English writing system, phonemes are the units which are coded in the grapheme-correspondences that make up the orthographic code.”

“The term ‘phoneme awareness‘ refers to the ability to reflect on and manipulate the speech sounds in words. It is a metalinguistic skill (a skill requiring conscious control of language) which develops after the ability to segment words into syllables and into rhyming parts […]. There has been controversy over whether phoneme awareness is a cause or a consequence of learning to read. […] In general, letters are easier to learn (being concrete) than phoneme awareness is to acquire (being an abstract skill). […] The acquisition of ‘phoneme awareness’ is a critical step in the development of decoding skills. A typical reader who possesses both letter knowledge and phoneme awareness can readily ‘sound out’ letters and blend the sounds together to read words or even meaningless but pronounceable letter strings (nonwords); conversely, they can split up words (segment them) into sounds for spelling. When these building blocks are in place, a child has developed ‘alphabetic competence’ and the task of becoming a reader can begin properly. […[ Another factor which is important in promoting reading fluency is the size of a child’s vocabulary. […] children with poor oral language skills, specifically limited semantic knowledge of words, [have e.g. been shown to have] particular difficulty in reading irregular words. […] Essentially, reading is a ‘big data’ problem—the task of learning involves extracting the statistical relationships between spelling (orthography) and sound (phonology) and using these to develop an algorithm for reading which is continually refined as further words are encountered.”

“It is commonly believed that spelling is simply the reverse of reading. It is not. As a consequence, learning to read does not always bring with it spelling proficiency. One reason is that the correspondences between letters and sounds used for reading (grapheme–phoneme correspondences) are not just the same as the sound-to-letter rules used for writing (phoneme–grapheme correspondences). Indeed, in English, the correspondences used in reading are generally more consistent than those used in spelling […] many of the early spelling errors children make replicate errors observed in speech development […] Children with dyslexia often struggle to spell words phonetically […] The relationship between phoneme awareness and letter knowledge at age 4 and phonological accuracy of spelling attempts at age 5 has been studied longitudinally with the aim of understanding individual differences in children’s spelling skills. As expected, these two components of alphabetic knowledge predicted the phonological accuracy of children’s early spelling. In turn, children’s phonological spelling accuracy along with their reading skill at this early stage predicted their spelling proficiency after three years in school. The findings suggest that the ability to transcode phonologically provides a foundation for the development of orthographic representations for spelling but this alone is not enough—information acquired from reading experience is required to ensure spellings are conventionally correct. […] for spelling as for reading, practice is important.”

“Irrespective of the language, reading involves mapping between the visual symbols of words and their phonological forms. What differs between languages is the nature of the symbols and the phonological units. Indeed, the mappings which need to be created are at different levels of ‘grain size’ in different languages (fine-grained in alphabets which connect letters and sounds like German or Italian, and more coarse-grained in logographic systems like Chinese that map between characters and syllabic units). Languages also differ in the complexity of their morphology and how this maps to the orthography. Among the alphabetic languages, English is the least regular, particularly for spelling; the most regular is Finnish with a completely transparent system of mappings between letters and phonemes […]. The term ‘orthographic depth’ is used to describe the level of regularity which is observed between languages — English is opaque (or deep), followed by Danish and French which also contain many irregularities, while Spanish and Italian rank among the more regular, transparent (or shallow) orthographies. Over the years, there has been much discussion as to whether children learning to read English have a particularly tough task and there is frequent speculation that dyslexia is more prevalent in English than in other languages. There is no evidence that this is the case. But what is clear is that it takes longer to become a fluent reader of English than of a more transparent language […] There are reasons other than orthographic consistency which make languages easier or harder to learn. One of these is the number of symbols in the writing system: the European languages have fewer than 35 while others have as many as 2,500. For readers of languages with extensive symbolic systems like Chinese, which has more than 2,000 characters, learning can be expected to continue through the middle and high school years. The visual-spatial complexity of the symbols may add further to the burden of learning. […] when there are more symbols in a writing system, the learning demands increase. […] Languages also differ importantly in the ways they represent phonology and meaning.”

“Given the many differences between languages and writing systems, there is remarkable similarity between the predictors of individual differences in reading across languages. The ELDEL study showed that for children reading alphabetic languages there are three significant predictors of growth in reading in the early years of schooling. These are letter knowledge, phoneme awareness, and rapid naming (a test in which the names of colours or objects have to be produced as quickly as possible in response to a random array of such items). Researchers have shown that a similar set of skills predict reading in Chinese […] However, there are also additional predictors that are language-specific. […] visual memory and visuo-spatial skills are stronger predictors of learning to read in a visually complex writing system, such as Chinese or Kannada, than they are for English. Moreover, there is emerging evidence of reciprocal relations – that learning to read in a complex orthography hones visuo-spatial abilities just as phoneme awareness improves as English children learn to read.”

“Children differ in the rate at which they learn to read and spell and children with dyslexia are typically the slowest to do so, assuming standard instruction for all. Indeed, it is clear from the outset that they have more difficulty in learning letters (by name or by sound) than their peers. As we have seen, letter knowledge is a crucial component of alphabetic competence and also offers a way into spelling. So for the dyslexic child with poor letter knowledge, learning to read and spell is compromised from the outset. In addition, there is a great deal of evidence that children with dyslexia have problems with phonological aspects of language from an early age and specifically, acquiring phonological awareness. […] The result is usually a significant problem in decoding—in fact, poor decoding is the hallmark of dyslexia, the signature of which is a nonword reading deficit. In the absence of remediation, this decoding difficulty persists and for many reading becomes something to be avoided. […] the most common pattern of reading deficit in dyslexia is an inability to read ‘new’ or unfamiliar words in the face of better developed word-reading skills — sometimes referred to as ‘phonological dyslexia’. […] Spelling poses a significant challenge to children with dyslexia. This seems inevitable, given their problems with phoneme awareness and decoding. The early spelling attempts of children with dyslexia are typically not phonetic in the way that their peers’ attempts are; rather, they are often difficult to decipher and best described as bizarre. […] errors continue to reflect profound difficulties in representing the sounds of words […] most people with dyslexia continue to show poor spelling through development and there is a very high correlation between (poor) spelling in the teenage years and (poor) spelling in middle age. […] While poor decoding can be a barrier to reading comprehension, many children and adults with dyslexia can read with adequate understanding when this is required but it takes them considerable time to do so, and they tend to avoid writing when it is possible to do so.”

…

Links:

Phonics.

History of dyslexia research. Samuel Orton. Rudolf Berlin. Anna Gillingham. Orton-Gillingham(-Stillman) approach. Thomas Richard Miles.

Seidenberg & McClelland’s triangle model.

“The Simple View of Reading”.

The lexical quality hypothesis (Perfetti & Hart). Matthew effect.

ELDEL project.

Diacritical mark.

Hiragana.

Phonetic radicals.

Morphogram.

Random stuff

i. Your Care Home in 120 Seconds. Some quotes:

“In order to get an overall estimate of mental power, psychologists have chosen a series of tasks to represent some of the basic elements of problem solving. The selection is based on looking at the sorts of problems people have to solve in everyday life, with particular attention to learning at school and then taking up occupations with varying intellectual demands. Those tasks vary somewhat, though they have a core in common.

Most tests include Vocabulary, examples: either asking for the definition of words of increasing rarity; or the names of pictured objects or activities; or the synonyms or antonyms of words.

Most tests include Reasoning, examples: either determining which pattern best completes the missing cell in a matrix (like Raven’s Matrices); or putting in the word which completes a sequence; or finding the odd word out in a series.

Most tests include visualization of shapes, examples: determining the correspondence between a 3-D figure and alternative 2-D figures; determining the pattern of holes that would result from a sequence of folds and a punch through folded paper; determining which combinations of shapes are needed to fill a larger shape.

Most tests include episodic memory, examples: number of idea units recalled across two or three stories; number of words recalled from across 1 to 4 trials of a repeated word list; number of words recalled when presented with a stimulus term in a paired-associate learning task.

Most tests include a rather simple set of basic tasks called Processing Skills. They are rather humdrum activities, like checking for errors, applying simple codes, and checking for similarities or differences in word strings or line patterns. They may seem low grade, but they are necessary when we try to organise ourselves to carry out planned activities. They tend to decline with age, leading to patchy, unreliable performance, and a tendency to muddled and even harmful errors. […]

A brain scan, for all its apparent precision, is not a direct measure of actual performance. Currently, scans are not as accurate in predicting behaviour as is a simple test of behaviour. This is a simple but crucial point: so long as you are willing to conduct actual tests, you can get a good understanding of a person’s capacities even on a very brief examination of their performance. […] There are several tests which have the benefit of being quick to administer and powerful in their predictions.[..] All these tests are good at picking up illness related cognitive changes, as in diabetes. (Intelligence testing is rarely criticized when used in medical settings). Delayed memory and working memory are both affected during diabetic crises. Digit Symbol is reduced during hypoglycaemia, as are Digits Backwards. Digit Symbol is very good at showing general cognitive changes from age 70 to 76. Again, although this is a limited time period in the elderly, the decline in speed is a notable feature. […]

The most robust and consistent predictor of cognitive change within old age, even after control for all the other variables, was the presence of the APOE e4 allele. APOE e4 carriers showed over half a standard deviation more general cognitive decline compared to noncarriers, with particularly pronounced decline in their Speed and numerically smaller, but still significant, declines in their verbal memory.

It is rare to have a big effect from one gene. Few people carry it, and it is not good to have.“

…

ii. What are common mistakes junior data scientists make?

Apparently the OP had second thoughts about this query so s/he deleted the question and marked the thread nsfw (??? …nothing remotely nsfw in that thread…). Fortunately the replies are all still there, there are quite a few good responses in the thread. I added some examples below:

“I think underestimating the domain/business side of things and focusing too much on tools and methodology. As a fairly new data scientist myself, I found myself humbled during this one project where I had I spent a lot of time tweaking parameters and making sure the numbers worked just right. After going into a meeting about it became clear pretty quickly that my little micro-optimizations were hardly important, and instead there were X Y Z big picture considerations I was missing in my analysis.”

[…]

-

Forgetting to check how actionable the model (or features) are. It doesn’t matter if you have amazing model for cancer prediction, if it’s based on features from tests performed as part of the post-mortem. Similarly, predicting account fraud after the money has been transferred is not going to be very useful.

-

Emphasis on lack of understanding of the business/domain.

-

Lack of communication and presentation of the impact. If improving your model (which is a quarter of the overall pipeline) by 10% in reducing customer churn is worth just ~100K a year, then it may not be worth putting into production in a large company.

-

Underestimating how hard it is to productionize models. This includes acting on the models outputs, it’s not just “run model, get score out per sample”.

-

Forgetting about model and feature decay over time, concept drift.

-

Underestimating the amount of time for data cleaning.

-

Thinking that data cleaning errors will be complicated.

-

Thinking that data cleaning will be simple to automate.

-

Thinking that automation is always better than heuristics from domain experts.

- Focusing on modelling at the expense of [everything] else”

“unhealthy attachments to tools. It really doesn’t matter if you use R, Python, SAS or Excel, did you solve the problem?”

“Starting with actual modelling way too soon: you’ll end up with a model that’s really good at answering the wrong question.

First, make sure that you’re trying to answer the right question, with the right considerations. This is typically not what the client initially told you. It’s (mainly) a data scientist’s job to help the client with formulating the right question.”

…

iii. Some random wikipedia links: Ottoman–Habsburg wars. Planetshine. Anticipation (genetics). Cloze test. Loop quantum gravity. Implicature. Starfish Prime. Stall (fluid dynamics). White Australia policy. Apostatic selection. Deimatic behaviour. Anti-predator adaptation. Lefschetz fixed-point theorem. Hairy ball theorem. Macedonia naming dispute. Holevo’s theorem. Holmström’s theorem. Sparse matrix. Binary search algorithm. Battle of the Bismarck Sea.

…

iv. 5-HTTLPR: A Pointed Review. This one is hard to quote, you should read all of it. I did however decide to add a few quotes from the post, as well as a few quotes from the comments:

“…what bothers me isn’t just that people said 5-HTTLPR mattered and it didn’t. It’s that we built whole imaginary edifices, whole castles in the air on top of this idea of 5-HTTLPR mattering. We “figured out” how 5-HTTLPR exerted its effects, what parts of the brain it was active in, what sorts of things it interacted with, how its effects were enhanced or suppressed by the effects of other imaginary depression genes. This isn’t just an explorer coming back from the Orient and claiming there are unicorns there. It’s the explorer describing the life cycle of unicorns, what unicorns eat, all the different subspecies of unicorn, which cuts of unicorn meat are tastiest, and a blow-by-blow account of a wrestling match between unicorns and Bigfoot.

This is why I start worrying when people talk about how maybe the replication crisis is overblown because sometimes experiments will go differently in different contexts. The problem isn’t just that sometimes an effect exists in a cold room but not in a hot room. The problem is more like “you can get an entire field with hundreds of studies analyzing the behavior of something that doesn’t exist”. There is no amount of context-sensitivity that can help this. […] The problem is that the studies came out positive when they shouldn’t have. This was a perfectly fine thing to study before we understood genetics well, but the whole point of studying is that, once you have done 450 studies on something, you should end up with more knowledge than you started with. In this case we ended up with less. […] I think we should take a second to remember that yes, this is really bad. That this is a rare case where methodological improvements allowed a conclusive test of a popular hypothesis, and it failed badly. How many other cases like this are there, where there’s no geneticist with a 600,000 person sample size to check if it’s true or not? How many of our scientific edifices are built on air? How many useless products are out there under the guise of good science? We still don’t know.”

A few more quotes from the comment section of the post:

“most things that are obviously advantageous or deleterious in a major way aren’t gonna hover at 10%/50%/70% allele frequency.

Population variance where they claim some gene found in > [non trivial]% of the population does something big… I’ll mostly tend to roll to disbelieve.

But if someone claims a family/village with a load of weirdly depressed people (or almost any other disorder affecting anything related to the human condition in any horrifying way you can imagine) are depressed because of a genetic quirk… believable but still make sure they’ve confirmed it segregates with the condition or they’ve got decent backing.

And a large fraction of people have some kind of rare disorder […]. Long tail. Lots of disorders so quite a lot of people with something odd.

It’s not that single variants can’t have a big effect. It’s that really big effects either win and spread to everyone or lose and end up carried by a tiny minority of families where it hasn’t had time to die out yet.

Very few variants with big effect sizes are going to be half way through that process at any given time.

Exceptions are

1: mutations that confer resistance to some disease as a tradeoff for something else […] 2: Genes that confer a big advantage against something that’s only a very recent issue.”

“I think the summary could be something like:

A single gene determining 50% of the variance in any complex trait is inherently atypical, because variance depends on the population plus environment and the selection for such a gene would be strong, rapidly reducing that variance.

However, if the environment has recently changed or is highly variable, or there is a trade-off against adverse effects it is more likely.

Furthermore – if the test population is specifically engineered to target an observed trait following an apparently Mendelian inheritance pattern – such as a family group or a small genetically isolated population plus controls – 50% of the variance could easily be due to a single gene.”

…

“The most over-used and under-analyzed statement in the academic vocabulary is surely “more research is needed”. These four words, occasionally justified when they appear as the last sentence in a Masters dissertation, are as often to be found as the coda for a mega-trial that consumed the lion’s share of a national research budget, or that of a Cochrane review which began with dozens or even hundreds of primary studies and progressively excluded most of them on the grounds that they were “methodologically flawed”. Yet however large the trial or however comprehensive the review, the answer always seems to lie just around the next empirical corner.

With due respect to all those who have used “more research is needed” to sum up months or years of their own work on a topic, this ultimate academic cliché is usually an indicator that serious scholarly thinking on the topic has ceased. It is almost never the only logical conclusion that can be drawn from a set of negative, ambiguous, incomplete or contradictory data.” […]

“Here is a quote from a typical genome-wide association study:

“Genome-wide association (GWA) studies on coronary artery disease (CAD) have been very successful, identifying a total of 32 susceptibility loci so far. Although these loci have provided valuable insights into the etiology of CAD, their cumulative effect explains surprisingly little of the total CAD heritability.” [1]

The authors conclude that not only is more research needed into the genomic loci putatively linked to coronary artery disease, but that – precisely because the model they developed was so weak – further sets of variables (“genetic, epigenetic, transcriptomic, proteomic, metabolic and intermediate outcome variables”) should be added to it. By adding in more and more sets of variables, the authors suggest, we will progressively and substantially reduce the uncertainty about the multiple and complex gene-environment interactions that lead to coronary artery disease. […] We predict tomorrow’s weather, more or less accurately, by measuring dynamic trends in today’s air temperature, wind speed, humidity, barometric pressure and a host of other meteorological variables. But when we try to predict what the weather will be next month, the accuracy of our prediction falls to little better than random. Perhaps we should spend huge sums of money on a more sophisticated weather-prediction model, incorporating the tides on the seas of Mars and the flutter of butterflies’ wings? Of course we shouldn’t. Not only would such a hyper-inclusive model fail to improve the accuracy of our predictive modeling, there are good statistical and operational reasons why it could well make it less accurate.”

…

vi. Why software projects take longer than you think – a statistical model.

“Anyone who built software for a while knows that estimating how long something is going to take is hard. It’s hard to come up with an unbiased estimate of how long something will take, when fundamentally the work in itself is about solving something. One pet theory I’ve had for a really long time, is that some of this is really just a statistical artifact.

Let’s say you estimate a project to take 1 week. Let’s say there are three equally likely outcomes: either it takes 1/2 week, or 1 week, or 2 weeks. The median outcome is actually the same as the estimate: 1 week, but the mean (aka average, aka expected value) is 7/6 = 1.17 weeks. The estimate is actually calibrated (unbiased) for the median (which is 1), but not for the the mean.

A reasonable model for the “blowup factor” (actual time divided by estimated time) would be something like a log-normal distribution. If the estimate is one week, then let’s model the real outcome as a random variable distributed according to the log-normal distribution around one week. This has the property that the median of the distribution is exactly one week, but the mean is much larger […] Intuitively the reason the mean is so large is that tasks that complete faster than estimated have no way to compensate for the tasks that take much longer than estimated. We’re bounded by 0, but unbounded in the other direction.”

I like this way to conceptually frame the problem, and I definitely do not think it only applies to software development.

“I filed this in my brain under “curious toy models” for a long time, occasionally thinking that it’s a neat illustration of a real world phenomenon I’ve observed. But surfing around on the interwebs one day, I encountered an interesting dataset of project estimation and actual times. Fantastic! […] The median blowup factor turns out to be exactly 1x for this dataset, whereas the mean blowup factor is 1.81x. Again, this confirms the hunch that developers estimate the median well, but the mean ends up being much higher. […]

If my model is right (a big if) then here’s what we can learn:

- People estimate the median completion time well, but not the mean.

- The mean turns out to be substantially worse than the median, due to the distribution being skewed (log-normally).

- When you add up the estimates for n tasks, things get even worse.

- Tasks with the most uncertainty (rather the biggest size) can often dominate the mean time it takes to complete all tasks.”

…

vii. Attraction inequality and the dating economy.

“…the relentless focus on inequality among politicians is usually quite narrow: they tend to consider inequality only in monetary terms, and to treat “inequality” as basically synonymous with “income inequality.” There are so many other types of inequality that get air time less often or not at all: inequality of talent, height, number of friends, longevity, inner peace, health, charm, gumption, intelligence, and fortitude. And finally, there is a type of inequality that everyone thinks about occasionally and that young single people obsess over almost constantly: inequality of sexual attractiveness. […] One of the useful tools that economists use to study inequality is the Gini coefficient. This is simply a number between zero and one that is meant to represent the degree of income inequality in any given nation or group. An egalitarian group in which each individual has the same income would have a Gini coefficient of zero, while an unequal group in which one individual had all the income and the rest had none would have a Gini coefficient close to one. […] Some enterprising data nerds have taken on the challenge of estimating Gini coefficients for the dating “economy.” […] The Gini coefficient for [heterosexual] men collectively is determined by [-ll-] women’s collective preferences, and vice versa. If women all find every man equally attractive, the male dating economy will have a Gini coefficient of zero. If men all find the same one woman attractive and consider all other women unattractive, the female dating economy will have a Gini coefficient close to one.”

“A data scientist representing the popular dating app “Hinge” reported on the Gini coefficients he had found in his company’s abundant data, treating “likes” as the equivalent of income. He reported that heterosexual females faced a Gini coefficient of 0.324, while heterosexual males faced a much higher Gini coefficient of 0.542. So neither sex has complete equality: in both cases, there are some “wealthy” people with access to more romantic experiences and some “poor” who have access to few or none. But while the situation for women is something like an economy with some poor, some middle class, and some millionaires, the situation for men is closer to a world with a small number of super-billionaires surrounded by huge masses who possess almost nothing. According to the Hinge analyst:

On a list of 149 countries’ Gini indices provided by the CIA World Factbook, this would place the female dating economy as 75th most unequal (average—think Western Europe) and the male dating economy as the 8th most unequal (kleptocracy, apartheid, perpetual civil war—think South Africa).”

Btw., I’m reasonably certain “Western Europe” as most people think of it is not average in terms of Gini, and that half-way down the list should rather be represented by some other region or country type, like, say Mongolia or Bulgaria. A brief look at Gini lists seemed to support this impression.

“Quartz reported on this finding, and also cited another article about an experiment with Tinder that claimed that that “the bottom 80% of men (in terms of attractiveness) are competing for the bottom 22% of women and the top 78% of women are competing for the top 20% of men.” These studies examined “likes” and “swipes” on Hinge and Tinder, respectively, which are required if there is to be any contact (via messages) between prospective matches. […] Yet another study, run by OkCupid on their huge datasets, found that women rate 80 percent of men as “worse-looking than medium,” and that this 80 percent “below-average” block received replies to messages only about 30 percent of the time or less. By contrast, men rate women as worse-looking than medium only about 50 percent of the time, and this 50 percent below-average block received message replies closer to 40 percent of the time or higher.

If these findings are to be believed, the great majority of women are only willing to communicate romantically with a small minority of men while most men are willing to communicate romantically with most women. […] It seems hard to avoid a basic conclusion: that the majority of women find the majority of men unattractive and not worth engaging with romantically, while the reverse is not true. Stated in another way, it seems that men collectively create a “dating economy” for women with relatively low inequality, while women collectively create a “dating economy” for men with very high inequality.”

…

I think the author goes a bit off the rails later in the post, but the data is interesting. It’s however important keeping in mind in contexts like these that sexual selection pressures apply at multiple levels, not just one, and that partner preferences can be non-trivial to model satisfactorily; for example as many women have learned the hard way, males may have very different standards for whom to a) ‘engage with romantically’ and b) ‘consider a long-term partner’.

…

viii. Flipping the Metabolic Switch: Understanding and Applying Health Benefits of Fasting.

“Intermittent fasting (IF) is a term used to describe a variety of eating patterns in which no or few calories are consumed for time periods that can range from 12 hours to several days, on a recurring basis. Here we focus on the physiological responses of major organ systems, including the musculoskeletal system, to the onset of the metabolic switch – the point of negative energy balance at which liver glycogen stores are depleted and fatty acids are mobilized (typically beyond 12 hours after cessation of food intake). Emerging findings suggest the metabolic switch from glucose to fatty acid-derived ketones represents an evolutionarily conserved trigger point that shifts metabolism from lipid/cholesterol synthesis and fat storage to mobilization of fat through fatty acid oxidation and fatty-acid derived ketones, which serve to preserve muscle mass and function. Thus, IF regimens that induce the metabolic switch have the potential to improve body composition in overweight individuals. […] many experts have suggested IF regimens may have potential in the treatment of obesity and related metabolic conditions, including metabolic syndrome and type 2 diabetes.(20)”

“In most studies, IF regimens have been shown to reduce overall fat mass and visceral fat both of which have been linked to increased diabetes risk.(139) IF regimens ranging in duration from 8 to 24 weeks have consistently been found to decrease insulin resistance.(12, 115, 118, 119, 122, 123, 131, 132, 134, 140) In line with this, many, but not all,(7) large-scale observational studies have also shown a reduced risk of diabetes in participants following an IF eating pattern.”

“…we suggest that future randomized controlled IF trials should use biomarkers of the metabolic switch (e.g., plasma ketone levels) as a measure of compliance and the magnitude of negative energy balance during the fasting period. It is critical for this switch to occur in order to shift metabolism from lipidogenesis (fat storage) to fat mobilization for energy through fatty acid β-oxidation. […] As the health benefits and therapeutic efficacies of IF in different disease conditions emerge from RCTs, it is important to understand the current barriers to widespread use of IF by the medical and nutrition community and to develop strategies for broad implementation. One argument against IF is that, despite the plethora of animal data, some human studies have failed to show such significant benefits of IF over CR [Calorie Restriction].(54) Adherence to fasting interventions has been variable, some short-term studies have reported over 90% adherence,(54) whereas in a one year ADMF study the dropout rate was 38% vs 29% in the standard caloric restriction group.(135)”

…

ix. Self-repairing cells: How single cells heal membrane ruptures and restore lost structures.

Artificial intelligence (I?)

This book was okay, but nothing all that special. In my opinion there’s too much philosophy and similar stuff in there (‘what does intelligence really mean anyway?’), and the coverage isn’t nearly as focused on technological aspects as e.g. Winfield’s (…in my opinion better…) book from the same series on robotics (which I covered here) was; I am certain I’d have liked this book better if it’d provided a similar type of coverage as did Winfield, but it didn’t. However it’s far from terrible and I liked the authors skeptical approach to e.g. singularitarianism. Below I have added some quotes and links, as usual.

…

“Artificial intelligence (AI) seeks to make computers do the sorts of things that minds can do. Some of these (e.g. reasoning) are normally described as ‘intelligent’. Others (e.g. vision) aren’t. But all involve psychological skills — such as perception, association, prediction, planning, motor control — that enable humans and animals to attain their goals. Intelligence isn’t a single dimension, but a richly structured space of diverse information-processing capacities. Accordingly, AI uses many different techniques, addressing many different tasks. […] although AI needs physical machines (i.e. computers), it’s best thought of as using what computer scientists call virtual machines. A virtual machine isn’t a machine depicted in virtual reality, nor something like a simulated car engine used to train mechanics. Rather, it’s the information-processing system that the programmer has in mind when writing a program, and that people have in mind when using it. […] Virtual machines in general are comprised of patterns of activity (information processing) that exist at various levels. […] the human mind can be understood as the virtual machine – or rather, the set of mutually interacting virtual machines, running in parallel […] – that is implemented in the brain. Progress in AI requires progress in defining interesting/useful virtual machines. […] How the information is processed depends on the virtual machine involved. [There are many different approaches.] […] In brief, all the main types of AI were being thought about, and even implemented, by the late 1960s – and in some cases, much earlier than that. […] Neural networks are helpful for modelling aspects of the brain, and for doing pattern recognition and learning. Classical AI (especially when combined with statistics) can model learning too, and also planning and reasoning. Evolutionary programming throws light on biological evolution and brain development. Cellular automata and dynamical systems can be used to model development in living organisms. Some methodologies are closer to biology than to psychology, and some are closer to non-reflective behaviour than to deliberative thought. To understand the full range of mentality, all of them will be needed […]. Many AI researchers [however] don’t care about how minds work: they seek technological efficiency, not scientific understanding. […] In the 21st century, […] it has become clear that different questions require different types of answers”.