Imitation Games – Avi Wigderson

…

If you wish to skip the introduction, the talk starts at 5:20. The talk itself lasts roughly an hour, with the last ca. 20 minutes devoted to Q&A – that part is worth watching as well.

Some links related to the talk below:

Theory of computation

Turing test

Computing Machinery and Intelligence

Probabilistic Encryption & How to Play Mental Poker Keeping Secret All Partial Information (Goldwasser & Micali, 1982)

Probabilistic algorithm

How To Generate Cryptographically Strong Sequences Of Pseudo-Random Bits (Blum & Micali, 1984)

Randomness extractor

Dense graph

Periodic sequence

Extremal graph theory

Szemerédi’s theorem

Green–Tao theorem

Szemerédi regularity lemma

New Proofs of the Green-Tao-Ziegler Dense Model Theorem: An Exposition

Calibrating Noise to Sensitivity in Private Data Analysis

Generalization in Adaptive Data Analysis and Holdout Reuse

Book: Math and Computation | Avi Wigderson

One-way function

Lattice-based cryptography

James Simons interview

James Simons. Differential geometry. Minimal varieties in riemannian manifolds. Shiing-Shen Chern. Characteristic Forms and Geometric Invariants. Renaissance Technologies.

“That’s really what’s great about basic science and in this case mathematics, I mean, I didn’t know any physics. It didn’t occur to me that this material, that Chern and I had developed would find use somewhere else altogether. This happens in basic science all the time that one guy’s discovery leads to someone else’s invention and leads to another guy’s machine or whatever it is. Basic science is the seed corn of our knowledge of the world. …I loved the subject, but I liked it for itself, I wasn’t thinking of applications. […] the government’s not doing such a good job at supporting basic science and so there’s a role for philanthropy, an increasingly important role for philanthropy.”

“My algorithm has always been: You put smart people together, you give them a lot of freedom, create an atmosphere where everyone talks to everyone else. They’re not hiding in the corner with their own little thing. They talk to everybody else. And you provide the best infrastructure. The best computers and so on that people can work with and make everyone partners.”

“We don’t have enough teachers of mathematics who know it, who know the subject … and that’s for a simple reason: 30-40 years ago, if you knew some mathematics, enough to teach in let’s say high school, there weren’t a million other things you could do with that knowledge. Oh yeah, maybe you could become a professor, but let’s suppose you’re not quite at that level but you’re good at math and so on. Being a math teacher was a nice job. But today if you know that much mathematics, you can get a job at Google, you can get a job at IBM, you can get a job in Goldman Sachs, I mean there’s plenty of opportunities that are going to pay more than being a high school teacher. There weren’t so many when I was going to high school … so the quality of high school teachers in math has declined, simply because if you know enough to teach in high school you know enough to work for Google…”

The pleasure of finding things out (II)

Here’s my first post about the book. In this post I have included a few more quotes from the last half of the book.

…

“Are physical theories going to keep getting more abstract and mathematical? Could there be today a theorist like Faraday in the early nineteenth century, not mathematically sophisticated but with a very powerful intuition about physics?

Feynman: I’d say the odds are strongly against it. For one thing, you need the math just to understand what’s been done so far. Beyond that, the behavior of subnuclear systems is so strange compared to the ones the brain evolved to deal with that the analysis has to be very abstract: To understand ice, you have to understand things that are themselves very unlike ice. Faraday’s models were mechanical – springs and wires and tense bands in space – and his images were from basic geometry. I think we’ve understood all we can from that point of view; what we’ve found in this century is different enough, obscure enough, that further progress will require a lot of math.”

“There’s a tendency to pomposity in all this, to make it all deep and profound. My son is taking a course in philosophy, and last night we were looking at something by Spinoza – and there was the most childish reasoning! There were all these Attributes, and Substances, all this meaningless chewing around, and we started to laugh. Now, how could we do that? Here’s this great Dutch philosopher, and we’re laughing at him. It’s because there was no excuse for it! In that same period there was Newton, there was Harvey studying the circulation of the blood, there were people with methods of analysis by which progress was being made! You can take every one of Spinoza’s propositions, and take the contrary propositions, and look at the world – and you can’t tell which is right. Sure, people were awed because he had the courage to take on these great questions, but it doesn’t do any good to have the courage if you can’t get anywhere with the question. […] It isn’t the philosophy that gets me, it’s the pomposity. If they’d just laugh at themselves! If they’d just say, “I think it’s like this, but von Leipzig thought it was like that, and he had a good shot at it, too.” If they’d explain that this is their best guess … But so few of them do”.

“The lesson you learn as you grow older in physics is that what we can do is a very small fraction of what there is. Our theories are really very limited.”

“The first principle is that you must not fool yourself – and you are the easiest person to fool. So you have to be very careful about that. After you’ve not fooled yourself, it’s easy not to fool other scientists. You just have to be honest in a conventional way after that.”

“When I was an undergraduate I worked with Professor Wheeler as a research assistant, and we had worked out together a new theory about how light worked, how the interaction between atoms in different places worked; and it was at that time an apparently interesting theory. So Professor Wigner, who was in charge of the seminars there [at Princeton], suggested that we give a seminar on it, and Professor Wheeler said that since I was a young man and hadn’t given seminars before, it would be a good opportunity to learn how to do it. So this was the first technical talk that I ever gave. I started to prepare the thing. Then Wigner came to me and said that he thought the work was important enough that he’d made special invitations to the seminar to Professor Pauli, who was a great professor of physics visiting from Zurich; to Professor von Neumann, the world’s greatest mathematician; to Henry Norris Russell, the famous astronomer; and to Albert Einstein, who was living near there. I must have turned absolutely white or something because he said to me, “Now don’t get nervous about it, don’t be worried about it. First of all, if Professor Russell falls asleep, don’t feel bad, because he always falls asleep at lectures. When Professor Pauli nods as you go along, don’t feel good, because he always nods, he has palsy,” and so on. That kind of calmed me down a bit”.

“Well, for the problem of understanding the hadrons and the muons and so on, I can see at the present time no practical applications at all, or virtually none. In the past many people have said that they could see no applications and then later they found applications. Many people would promise under those circumstances that something’s bound to be useful. However, to be honest – I mean he looks foolish; saying there will never be anything useful is obviously a foolish thing to do. So I’m going to be foolish and say these damn things will never have any application, as far as I can tell. I’m too dumb to see it. All right? So why do you do it? Applications aren’t the only thing in the world. It’s interesting in understanding what the world is made of. It’s the same interest, the curiosity of man that makes him build telescopes. What is the use of discovering the age of the universe? Or what are these quasars that are exploding at long distances? I mean what’s the use of all that astronomy? There isn’t any. Nonetheless, it’s interesting. So it’s the same kind of exploration of our world that I’m following and it’s curiosity that I’m satisfying. If human curiosity represents a need, the attempt to satisfy curiosity, then this is practical in the sense that it is that. That’s the way I would look at it at the present time. I would not put out any promise that it would be practical in some economic sense.”

“To science we also bring, besides the experiment, a tremendous amount of human intellectual attempt at generalization. So it’s not merely a collection of all those things which just happen to be true in experiments. It’s not just a collection of facts […] all the principles must be as wide as possible, must be as general as possible, and still be in complete accord with experiment, that’s the challenge. […] Every one of the concepts of science is on a scale graduated somewhere between, but at neither end of, absolute falsity or absolute truth. It is necessary, I believe, to accept this idea, not only for science, but also for other things; it is of great value to acknowledge ignorance. It is a fact that when we make decisions in our life, we don’t necessarily know that we are making them correctly; we only think that we are doing the best we can – and that is what we should do.”

“In this age of specialization, men who thoroughly know one field are often incompetent to discuss another.”

“I believe that moral questions are outside of the scientific realm. […] The typical human problem, and one whose answer religion aims to supply, is always of the following form: Should I do this? Should we do this? […] To answer this question we can resolve it into two parts: First – If I do this, what will happen? – and second – Do I want that to happen? What would come of it of value – of good? Now a question of the form: If I do this, what will happen? is strictly scientific. […] The technique of it, fundamentally, is: Try it and see. Then you put together a large amount of information from such experiences. All scientists will agree that a question – any question, philosophical or other – which cannot be put into the form that can be tested by experiment (or, in simple terms, that cannot be put into the form: If I do this, what will happen?) is not a scientific question; it is outside the realm of science.”

The pleasure of finding things out (I?)

As I put it in my Goodreads review of the book, “I felt in good company while reading this book”. Some of the ideas in the book are by now well known; for example, some of the interview snippets included in the book have been added to YouTube and have been viewed by hundreds of thousands of people. (I added a couple of them to my ‘about’ page some years ago, and they’re still there; these are enjoyable videos to watch and they have aged well! The overlap between the book’s text and the sound recordings available is not 100 % for this material, but it’s close enough that I assume these were the same interviews.) Other ideas and pieces I would assume to be less well known, for example Feynman’s encounter with Uri Geller in the latter’s hotel room, where he was investigating Geller’s supposed abilities related to mind reading and key bending.

I have added some sample quotes from the book below. It’s a good book, recommended.

…

“My interest in science is to simply find out about the world, and the more I find out the better it is, like, to find out. […] You see, one thing is, I can live with doubt and uncertainty and not knowing. I think it’s much more interesting to live not knowing than to have answers which might be wrong. I have approximate answers and possible beliefs and different degrees of certainty about different things, but I’m not absolutely sure of anything and there are many things I don’t know anything about […] I don’t have to know an answer, I don’t feel frightened by not knowing things, by being lost in a mysterious universe without having any purpose, which is the way it really is so far as I can tell. It doesn’t frighten me.”

“Some people look at the activity of the brain in action and see that in many respects it surpasses the computer of today, and in many other respects the computer surpasses ourselves. This inspires people to design machines that can do more. What often happens is that an engineer has an idea of how the brain works (in his opinion) and then designs a machine that behaves that way. This new machine may in fact work very well. But, I must warn you that that does not tell us anything about how the brain actually works, nor is it necessary to ever really know that, in order to make a computer very capable. It is not necessary to understand the way birds flap their wings and how the feathers are designed in order to make a flying machine. It is not necessary to understand the lever system in the legs of a cheetah – an animal that runs fast – in order to make an automobile with wheels that goes very fast. It is therefore not necessary to imitate the behavior of Nature in detail in order to engineer a device which can in many respects surpass Nature’s abilities.”

“These ideas and techniques [of scientific investigation], of course, you all know. I’ll just review them […] The first is the matter of judging evidence – well, the first thing really is, before you begin you must not know the answer. So you begin by being uncertain as to what the answer is. This is very, very important […] The question of doubt and uncertainty is what is necessary to begin; for if you already know the answer there is no need to gather any evidence about it. […] We absolutely must leave room for doubt or there is no progress and there is no learning. There is no learning without having to pose a question. And a question requires doubt. […] Authority may be a hint as to what the truth is, but it is not the source of information. As long as it’s possible, we should disregard authority whenever the observations disagree with it. […] Science is the belief in the ignorance of experts.”

“If we look away from the science and look at the world around us, we find out something rather pitiful: that the environment that we live in is so actively, intensely unscientific. Galileo could say: “I noticed that Jupiter was a ball with moons and not a god in the sky. Tell me, what happened to the astrologers?” Well, they print their results in the newspapers, in the United States at least, in every daily paper every day. Why do we still have astrologers? […] There is always some crazy stuff. There is an infinite amount of crazy stuff, […] the environment is actively, intensely unscientific. There is talk about telepathy still, although it’s dying out. There is faith-healing galore, all over. There is a whole religion of faith-healing. There’s a miracle at Lourdes where healing goes on. Now, it might be true that astrology is right. It might be true that if you go to the dentist on the day that Mars is at right angles to Venus, that it is better than if you go on a different day. It might be true that you can be cured by the miracle of Lourdes. But if it is true it ought to be investigated. Why? To improve it. If it is true then maybe we can find out if the stars do influence life; that we could make the system more powerful by investigating statistically, scientifically judging the evidence objectively, more carefully. If the healing process works at Lourdes, the question is how far from the site of the miracle can the person, who is ill, stand? Have they in fact made a mistake and the back row is really not working? Or is it working so well that there is plenty of room for more people to be arranged near the place of the miracle? 
Or is it possible, as it is with the saints which have recently been created in the United States–there is a saint who cured leukemia apparently indirectly – that ribbons that are touched to the sheet of the sick person (the ribbon having previously touched some relic of the saint) increase the cure of leukemia–the question is, is it gradually being diluted? You may laugh, but if you believe in the truth of the healing, then you are responsible to investigate it, to improve its efficiency and to make it satisfactory instead of cheating. For example, it may turn out that after a hundred touches it doesn’t work anymore. Now it’s also possible that the results of this investigation have other consequences, namely, that nothing is there.”

“I believe that a scientist looking at nonscientific problems is just as dumb as the next guy – and when he talks about a nonscientific matter, he will sound as naive as anyone untrained in the matter.”

“If we want to solve a problem that we have never solved before, we must leave the door to the unknown ajar.”

“For a successful technology, reality must take precedence over public relations, for nature cannot be fooled.”

“I would like to say a word or two […] about words and definitions, because it is necessary to learn the words. It is not science. That doesn’t mean just because it is not science that we don’t have to teach the words. We are not talking about what to teach; we are talking about what science is. It is not science to know how to change centigrade to Fahrenheit. It’s necessary, but it is not exactly science. […] I finally figured out a way to test whether you have taught an idea or you have only taught a definition. Test it this way: You say, “Without using the new word which you have just learned, try to rephrase what you have just learned in your own language.””

“My father dealt a little bit with energy and used the term after I got a little bit of the idea about it. […] He would say, “It [a toy dog] moves because the sun is shining,” […]. I would say “No. What has that to do with the sun shining? It moved because I wound up the springs.” “And why, my friend, are you able to move to wind up this spring?” “I eat.” “What, my friend, do you eat?” “I eat plants.” “And how do they grow?” “They grow because the sun is shining.” […] The only objection in this particular case was that this was the first lesson. It must certainly come later, telling you what energy is, but not to such a simple question as “What makes a [toy] dog move?” A child should be given a child’s answer. “Open it up; let’s look at it.””

“Now the point of this is that the result of observation, even if I were unable to come to the ultimate conclusion, was a wonderful piece of gold, with a marvelous result. It was something marvelous. Suppose I were told to observe, to make a list, to write down, to do this, to look, and when I wrote my list down, it was filed with 130 other lists in the back of a notebook. I would learn that the result of observation is relatively dull, that nothing much comes of it. I think it is very important – at least it was to me – that if you are going to teach people to make observations, you should show that something wonderful can come from them. […] [During my life] every once in a while there was the gold of a new understanding that I had learned to expect when I was a kid, the result of observation. For I did not learn that observation was not worthwhile. […] The world looks so different after learning science. For example, the trees are made of air, primarily. When they are burned, they go back to air, and in the flaming heat is released the flaming heat of the sun which was bound in to convert the air into trees, and in the ash is the small remnant of the part which did not come from air, that came from the solid earth, instead. These are beautiful things, and the content of science is wonderfully full of them. They are very inspiring, and they can be used to inspire others.”

“Physicists are trying to find out how nature behaves; they may talk carelessly about some “ultimate particle” because that’s the way nature looks at a given moment, but . . . Suppose people are exploring a new continent, OK? They see water coming along the ground, they’ve seen that before, and they call it “rivers.” So they say they’re exploring to find the headwaters, they go upriver, and sure enough, there they are, it’s all going very well. But lo and behold, when they get up far enough they find the whole system’s different: There’s a great big lake, or springs, or the rivers run in a circle. You might say, “Aha! They’ve failed!” but not at all! The real reason they were doing it was to explore the land. If it turned out not to be headwaters, they might be slightly embarrassed at their carelessness in explaining themselves, but no more than that. As long as it looks like the way things are built is wheels within wheels, then you’re looking for the innermost wheel – but it might not be that way, in which case you’re looking for whatever the hell it is that you find!”

Quotes

i. “The party that negotiates in haste is often at a disadvantage.” (Howard Raiffa)

ii. “Advice: don’t embarrass your bargaining partner by forcing him or her to make all the concessions.” (-ll-)

iii. “Disputants often fare poorly when they each act greedily and deceptively.” (-ll-)

iv. “Each man does seek his own interest, but, unfortunately, not according to the dictates of reason.” (Kenneth Waltz)

v. “Whatever is said after I’m gone is irrelevant.” (Jimmy Savile)

vi. “Trust is an important lubricant of a social system. It is extremely efficient; it saves a lot of trouble to have a fair degree of reliance on other people’s word. Unfortunately this is not a commodity which can be bought very easily. If you have to buy it, you already have some doubts about what you have bought.” (Kenneth Arrow)

vii. “… an author never does more damage to his readers than when he hides a difficulty.” (Évariste Galois)

viii. “A technical argument by a trusted author, which is hard to check and looks similar to arguments known to be correct, is hardly ever checked in detail” (Vladimir Voevodsky)

ix. “Suppose you want to teach the “cat” concept to a very young child. Do you explain that a cat is a relatively small, primarily carnivorous mammal with retractible claws, a distinctive sonic output, etc.? I’ll bet not. You probably show the kid a lot of different cats, saying “kitty” each time, until it gets the idea. To put it more generally, generalizations are best made by abstraction from experience. They should come one at a time; too many at once overload the circuits.” (Ralph P. Boas Jr.)

x. “Every author has several motivations for writing, and authors of technical books always have, as one motivation, the personal need to understand; that is, they write because they want to learn, or to understand a phenomenon, or to think through a set of ideas.” (Albert Wymore)

xi. “Great mathematics is achieved by solving difficult problems not by fabricating elaborate theories in search of a problem.” (Harold Davenport)

xii. “Is science really gaining in its assault on the totality of the unsolved? As science learns one answer, it is characteristically true that it also learns several new questions. It is as though science were working in a great forest of ignorance, making an ever larger circular clearing within which, not to insist on the pun, things are clear… But as that circle becomes larger and larger, the circumference of contact with ignorance also gets longer and longer. Science learns more and more. But there is an ultimate sense in which it does not gain; for the volume of the appreciated but not understood keeps getting larger. We keep, in science, getting a more and more sophisticated view of our essential ignorance.” (Warren Weaver)

xiii. “When things get too complicated, it sometimes makes sense to stop and wonder: Have I asked the right question?” (Enrico Bombieri)

xiv. “The mean and variance are unambiguously determined by the distribution, but a distribution is, of course, not determined by its mean and variance: A number of different distributions have the same mean and the same variance.” (Richard von Mises)

xv. “Algorithms existed for at least five thousand years, but people did not know that they were algorithmizing. Then came Turing (and Post and Church and Markov and others) and formalized the notion.” (Doron Zeilberger)

xvi. “When a problem seems intractable, it is often a good idea to try to study “toy” versions of it in the hope that as the toys become increasingly larger and more sophisticated, they would metamorphose, in the limit, to the real thing.” (-ll-)

xvii. “The kind of mathematics foisted on children in schools is not meaningful, fun, or even very useful. This does not mean that an individual child cannot turn it into a valuable and enjoyable personal game. For some the game is scoring grades; for others it is outwitting the teacher and the system. For many, school math is enjoyable in its repetitiveness, precisely because it is so mindless and dissociated that it provides a shelter from having to think about what is going on in the classroom. But all this proves is the ingenuity of children. It is not a justification for school math to say that despite its intrinsic dullness, inventive children can find excitement and meaning in it.” (Seymour Papert)

xviii. “The optimist believes that this is the best of all possible worlds, and the pessimist fears that this might be the case.” (Ivar Ekeland)

xix. “An equilibrium is not always an optimum; it might not even be good. This may be the most important discovery of game theory.” (-ll-)

xx. “It’s not all that rare for people to suffer from a self-hating monologue. Any good theories about what’s going on there?”

“If there’s things you don’t like about your life, you can blame yourself, or you can blame others. If you blame others and you’re of low status, you’ll be told to cut that out and start blaming yourself. If you blame yourself and you can’t solve the problems, self-hate is the result.” (Nancy Lebovitz & ‘The Nybbler’)

Einstein quotes

“Einstein emerges from this collection of quotes, drawn from many different sources, as a complete and fully rounded human being […] Knowledge of the darker side of Einstein’s life makes his achievement in science and in public affairs even more miraculous. This book shows him as he was – not a superhuman genius but a human genius, and all the greater for being human.”

…

I’ve recently read The Ultimate Quotable Einstein, from the foreword of which the above quote is taken, which contains roughly 1600 quotes by or about Albert Einstein; most of the quotes are by Einstein himself, but the book also includes more than 50 pages towards the end of the book containing quotes by others about him. I was probably not in the main target group, but I do like good quote collections and I figured there might be enough good quotes in the book for it to make sense for me to give it a try. On the other hand after having read the foreword by Freeman Dyson I knew there would probably be a lot of quotes in the book which I probably wouldn’t find too interesting; I’m not really sure why I should give a crap if/why a guy who died more than 60 years ago and whom I have never met and never will was having an affair during the early 1920s, or why I should care what Einstein thought about his mother or his ex-wife, but if that kind of stuff interests you the book has stuff about those kinds of things as well. My own interest in Einstein, such as it is, is mainly in ‘Einstein the scientist’ (and perhaps also in this particular context ‘Einstein the aphorist’), not ‘Einstein the father’ or ‘Einstein the husband’. I also don’t find the political views which he held to be very interesting, but again if you want to know what Einstein thought about things like Zionism, pacifism, and world government the book includes quotes about such topics as well.

Overall I should say that I was a little underwhelmed by the book and the quotes it includes, but I would also note that people who are interested in knowing more about Einstein will likely find a lot of valuable source material here, and that I did give the book 3 stars on Goodreads. I did learn a lot of new things about Einstein by reading the book, but this is not surprising given how little I knew about him before I started reading the book; for example I had no idea that he was offered the presidency of Israel a few years before his death. I noticed only two quotes which were included more than once (a quote on pages 187-188 was repeated on page 453, and a quote on page 295 was repeated on page 455), and although I cannot guarantee that there aren’t any other repeats, almost all quotes included in the book are unique, in the sense that they’re only included once in the coverage. However, it should also be mentioned in this context that there are a few quotes on specific themes which are very similar to other quotes included elsewhere in the coverage. I do consider this unavoidable considering the number of quotes included, though.

I have included some sample quotes from the book below – I have tried to include quotes on a wide variety of topics. All quotes without a source below are sourced quotes by Einstein (the book also contains a small collection of quotes ‘attributed to Einstein’, many of which are either not sourced or sourced in such a manner that Calaprice did not feel convinced that the quote was actually by Einstein – none of the quotes from that part of the book’s coverage are included below).

…

“When a blind beetle crawls over the surface of a curved branch, it doesn’t notice that the track it has covered is indeed curved. I was lucky enough to notice what the beetle didn’t notice.” (“in answer to his son Eduard’s question about why he is so famous, 1922.”)

“The most valuable thing a teacher can impart to children is not knowledge and understanding per se but a longing for knowledge and understanding” (see on a related note also Susan Engel’s book – US)

“Teaching should be such that what is offered is perceived as a valuable gift and not as a hard duty.”

“I am not prepared to accept all his conclusions, but I consider his work an immensely valuable contribution to the science of human behavior.” (Einstein said this about Sigmund Freud during an interview. Yeah…)

“I consider him the best of the living writers.” (on Bertrand Russell. Russell incidentally also admired Einstein immensely – the last part of the book, including quotes by others about Einstein, includes this one by him: “Of all the public figures that I have known, Einstein was the one who commanded my most wholehearted admiration.”)

“I cannot understand the passive response of the whole civilized world to this modern barbarism. Doesn’t the world see that Hitler is aiming for war?” (1933. Related link.)

“Children don’t heed the life experience of their parents, and nations ignore history. Bad lessons always have to be learned anew.”

“Few people are capable of expressing with equanimity opinions that differ from the prejudices of their social environment. Most people are even incapable of forming such opinions.”

“Sometimes one pays most for things one gets for nothing.”

“Thanks to my fortunate idea of introducing the relativity principle into physics, you (and others) now enormously overrate my scientific abilities, to the point where this makes me quite uncomfortable.” (To Arnold Sommerfeld, 1908)

“No fairer destiny could be allotted to any physical theory than that it should of itself point out the way to the introduction of a more comprehensive theory, in which it lives on as a limiting case.”

“Mother nature, or more precisely an experiment, is a resolute and seldom friendly referee […]. She never says “yes” to a theory; but only “maybe” under the best of circumstances, and in most cases simply “no”.”

“The aim of science is, on the one hand, a comprehension, as complete as possible, of the connection between the sense experiences in their totality, and, on the other hand, the accomplishment of this aim by the use of a minimum of primary concepts and relations.” A related quote from the book: “Although it is true that it is the goal of science to discover rules which permit the association and foretelling of facts, this is not its only aim. It also seeks to reduce the connections discovered to the smallest possible number of mutually independent conceptual elements. It is in this striving after the rational unification of the manifold that it encounters its greatest successes.”

“According to general relativity, the concept of space detached from any physical content does not exist. The physical reality of space is represented by a field whose components are continuous functions of four independent variables – the coordinates of space and time.”

“One thing I have learned in a long life: that all our science, measured against reality, is primitive and childlike – and yet it is the most precious thing we have.”

“”Why should I? Everybody knows me there” (upon being told by his wife to dress properly when going to the office). “Why should I? No one knows me there” (upon being told to dress properly for his first big conference).”

“Marriage is but slavery made to appear civilized.”

“Nothing is more destructive of respect for the government and the law of the land than passing laws that cannot be enforced.”

“Einstein would be one of the greatest theoretical physicists of all time even if he had not written a single line on relativity.” (Max Born)

“Einstein’s [violin] playing is excellent, but he does not deserve his world fame; there are many others just as good.” (“A music critic on an early 1920s performance, unaware that Einstein’s fame derived from physics, not music. Quoted in Reiser, Albert Einstein, 202-203″)

Quotes

i. “By all means think yourself big but don’t think everyone else small” (‘Notes on Flyleaf of Fresh ms. Book’, Scott’s Last Expedition. See also this).

ii. “The man who knows everyone’s job isn’t much good at his own.” (-ll-)

iii. “It is amazing what little harm doctors do when one considers all the opportunities they have” (Mark Twain, as quoted in the Oxford Handbook of Clinical Medicine, p.595).

iv. “A first-rate theory predicts; a second-rate theory forbids and a third-rate theory explains after the event.” (Aleksander Isaakovich Kitaigorodski)

v. “[S]ome of the most terrible things in the world are done by people who think, genuinely think, that they’re doing it for the best” (Terry Pratchett, Snuff).

vi. “That was excellently observ’d, say I, when I read a Passage in an Author, where his Opinion agrees with mine. When we differ, there I pronounce him to be mistaken.” (Jonathan Swift)

vii. “Death is nature’s master stroke, albeit a cruel one, because it allows genotypes space to try on new phenotypes.” (Quote from the Oxford Handbook of Clinical Medicine, p.6)

viii. “The purpose of models is not to fit the data but to sharpen the questions.” (Samuel Karlin)

ix. “We may […] view set theory, and mathematics generally, in much the way in which we view theoretical portions of the natural sciences themselves; as comprising truths or hypotheses which are to be vindicated less by the pure light of reason than by the indirect systematic contribution which they make to the organizing of empirical data in the natural sciences.” (Quine)

x. “At root what is needed for scientific inquiry is just receptivity to data, skill in reasoning, and yearning for truth. Admittedly, ingenuity can help too.” (-ll-)

xi. “A statistician carefully assembles facts and figures for others who carefully misinterpret them.” (Quote from Mathematically Speaking – A Dictionary of Quotations, p.329. Only source given in the book is: “Quoted in Evan Esar, 20,000 Quips and Quotes“)

xii. “A knowledge of statistics is like a knowledge of foreign languages or of algebra; it may prove of use at any time under any circumstances.” (Quote from Mathematically Speaking – A Dictionary of Quotations, p. 328. The source provided is: “Elements of Statistics, Part I, Chapter I (p.4)”).

xiii. “We own to small faults to persuade others that we have not great ones.” (Rochefoucauld)

xiv. “There is more self-love than love in jealousy.” (-ll-)

xv. “We should not judge of a man’s merit by his great abilities, but by the use he makes of them.” (-ll-)

xvi. “We should gain more by letting the world see what we are than by trying to seem what we are not.” (-ll-)

xvii. “Put succinctly, a prospective study looks for the effects of causes whereas a retrospective study examines the causes of effects.” (Quote from p.49 of Principles of Applied Statistics, by Cox & Donnelly)

xviii. “… he who seeks for methods without having a definite problem in mind seeks for the most part in vain.” (David Hilbert)

xix. “Give every man thy ear, but few thy voice” (Shakespeare).

xx. “Often the fear of one evil leads us into a worse.” (Nicolas Boileau-Despréaux)

The Nature of Statistical Evidence

Here’s my goodreads review of the book.

As I’ve observed many times before, a wordpress blog like mine is not a particularly nice place to cover mathematical topics involving equations and lots of Greek letters, so the coverage below will be more or less purely conceptual; don’t take this to mean that the book doesn’t contain formulas. Some parts of the book look like this:

That of course makes the book hard to blog, and not only because the equations are typographically hard to deal with. In general it’s hard to talk about the content of a book like this one without going into a lot of details outlining how you get from A to B to C – usually you’re only really interested in C, but you need A and B to make sense of C. At this point I’ve sort of concluded that when covering books like this one I’ll only cover some of the main themes which are easy to discuss in a blog post, and that I should skip (potentially important) points which might also be of interest if they’re difficult to discuss in a small amount of space, which is unfortunately often the case. I should perhaps observe that although I noted in my goodreads review that in a way there was a bit too much philosophy and a bit too little statistics in the coverage for my taste, you should definitely not take that objection to mean that this book is full of fluff; a lot of that philosophical stuff is ‘formal logic’ type stuff and related comments, and the book in general is quite dense. As I also noted in the goodreads review I didn’t read this book as carefully as I might have done – for example I skipped a couple of the technical proofs because they didn’t seem to be worth the effort – and I’d probably need to read it again to fully understand some of the minor points made throughout the more technical parts of the coverage. That’s of course a related reason why I don’t cover the book in a great amount of detail here – it’s hard work just to read the damn thing, and talking about the technical stuff in detail here as well would definitely be overkill, even if it would surely make me understand the material better.

I have added some observations from the coverage below. I’ve tried to clarify beforehand which question/topic the quote in question deals with, to ease reading/understanding of the topics covered.

…

On how statistical methods are related to experimental science:

“statistical methods have aims similar to the process of experimental science. But statistics is not itself an experimental science, it consists of models of how to do experimental science. Statistical theory is a logical — mostly mathematical — discipline; its findings are not subject to experimental test. […] The primary sense in which statistical theory is a science is that it guides and explains statistical methods. A sharpened statement of the purpose of this book is to provide explanations of the senses in which some statistical methods provide scientific evidence.”

On mathematics and axiomatic systems (the book goes into much more detail than this):

“It is not sufficiently appreciated that a link is needed between mathematics and methods. Mathematics is not about the world until it is interpreted and then it is only about models of the world […]. No contradiction is introduced by either interpreting the same theory in different ways or by modeling the same concept by different theories. […] In general, a primitive undefined term is said to be interpreted when a meaning is assigned to it and when all such terms are interpreted we have an interpretation of the axiomatic system. It makes no sense to ask which is the correct interpretation of an axiom system. This is a primary strength of the axiomatic method; we can use it to organize and structure our thoughts and knowledge by simultaneously and economically treating all interpretations of an axiom system. It is also a weakness in that failure to define or interpret terms leads to much confusion about the implications of theory for application.”

It’s all about models:

“The scientific method of theory checking is to compare predictions deduced from a theoretical model with observations on nature. Thus science must predict what happens in nature but it need not explain why. […] whether experiment is consistent with theory is relative to accuracy and purpose. All theories are simplifications of reality and hence no theory will be expected to be a perfect predictor. Theories of statistical inference become relevant to scientific process at precisely this point. […] Scientific method is a practice developed to deal with experiments on nature. Probability theory is a deductive study of the properties of models of such experiments. All of the theorems of probability are results about models of experiments.”

But given a frequentist interpretation you can test your statistical theories with the real world, right? Right? Well…

“How might we check the long run stability of relative frequency? If we are to compare mathematical theory with experiment then only finite sequences can be observed. But for the Bernoulli case, the event that frequency approaches probability is stochastically independent of any sequence of finite length. […] Long-run stability of relative frequency cannot be checked experimentally. There are neither theoretical nor empirical guarantees that, a priori, one can recognize experiments performed under uniform conditions and that under these circumstances one will obtain stable frequencies.” [related link]

What should we expect to get out of mathematical and statistical theories of inference?

“What can we expect of a theory of statistical inference? We can expect an internally consistent explanation of why certain conclusions follow from certain data. The theory will not be about inductive rationality but about a model of inductive rationality. Statisticians are used to thinking that they apply their logic to models of the physical world; less common is the realization that their logic itself is only a model. Explanation will be in terms of introduced concepts which do not exist in nature. Properties of the concepts will be derived from assumptions which merely seem reasonable. This is the only sense in which the axioms of any mathematical theory are true […] We can expect these concepts, assumptions, and properties to be intuitive but, unlike natural science, they cannot be checked by experiment. Different people have different ideas about what “seems reasonable,” so we can expect different explanations and different properties. We should not be surprised if the theorems of two different theories of statistical evidence differ. If two models had no different properties then they would be different versions of the same model […] We should not expect to achieve, by mathematics alone, a single coherent theory of inference, for mathematical truth is conditional and the assumptions are not “self-evident.” Faith in a set of assumptions would be needed to achieve a single coherent theory.”

On disagreements about the nature of statistical evidence:

“The context of this section is that there is disagreement among experts about the nature of statistical evidence and consequently much use of one formulation to criticize another. Neyman (1950) maintains that, from his behavioral hypothesis testing point of view, Fisherian significance tests do not express evidence. Royall (1997) employs the “law” of likelihood to criticize hypothesis as well as significance testing. Pratt (1965), Berger and Selke (1987), Berger and Berry (1988), and Casella and Berger (1987) employ Bayesian theory to criticize sampling theory. […] Critics assume that their findings are about evidence, but they are at most about models of evidence. Many theoretical statistical criticisms, when stated in terms of evidence, have the following outline: According to model A, evidence satisfies proposition P. But according to model B, which is correct since it is derived from “self-evident truths,” P is not true. Now evidence can’t be two different ways so, since B is right, A must be wrong. Note that the argument is symmetric: since A appears “self-evident” (to adherents of A) B must be wrong. But both conclusions are invalid since evidence can be modeled in different ways, perhaps useful in different contexts and for different purposes. From the observation that P is a theorem of A but not of B, all we can properly conclude is that A and B are different models of evidence. […] The common practice of using one theory of inference to critique another is a misleading activity.”

Is mathematics a science?

“Is mathematics a science? It is certainly systematized knowledge much concerned with structure, but then so is history. Does it employ the scientific method? Well, partly; hypothesis and deduction are the essence of mathematics and the search for counter examples is a mathematical counterpart of experimentation; but the question is not put to nature. Is mathematics about nature? In part. The hypotheses of most mathematics are suggested by some natural primitive concept, for it is difficult to think of interesting hypotheses concerning nonsense syllables and to check their consistency. However, it often happens that as a mathematical subject matures it tends to evolve away from the original concept which motivated it. Mathematics in its purest form is probably not natural science since it lacks the experimental aspect. Art is sometimes defined to be creative work displaying form, beauty and unusual perception. By this definition pure mathematics is clearly an art. On the other hand, applied mathematics, taking its hypotheses from real world concepts, is an attempt to describe nature. Applied mathematics, without regard to experimental verification, is in fact largely the “conditional truth” portion of science. If a body of applied mathematics has survived experimental test to become trustworthy belief then it is the essence of natural science.”

Then what about statistics – is statistics a science?

“Statisticians can and do make contributions to subject matter fields such as physics, and demography but statistical theory and methods proper, distinguished from their findings, are not like physics in that they are not about nature. […] Applied statistics is natural science but the findings are about the subject matter field not statistical theory or method. […] Statistical theory helps with how to do natural science but it is not itself a natural science.”

…

I should note that I am, and have for a long time been, in broad agreement with the author’s remarks on the nature of science and mathematics above. Popper, among many others, discussed this topic a long time ago e.g. in The Logic of Scientific Discovery and I’ve basically been of the opinion that (‘pure’) mathematics is not science (‘but rather ‘something else’ … and that doesn’t mean it’s not useful’) for probably a decade. I’ve had a harder time coming to terms with how precisely to deal with statistics in terms of these things, and in that context the book has been conceptually helpful.

Below I’ve added a few links to other stuff also covered in the book:

Propositional calculus.

Kolmogorov’s axioms.

Neyman-Pearson lemma.

Radon-Nikodym theorem. (not covered in the book, but the necessity of using ‘a Radon-Nikodym derivative’ to obtain an answer to a question being asked was remarked upon at one point, and I had no clue what he was talking about – it seems that the stuff in the link was what he was talking about).

A very specific and relevant link: Berger and Wolpert (1984). The stuff about Birnbaum’s argument covered from p.24 (p.40) and forward is covered in some detail in the book. The author is critical of the model and explains in the book in some detail why that is. See also: On the foundations of statistical inference (Birnbaum, 1962).

Introduction to Systems Analysis: Mathematically Modeling Natural Systems (I)

“This book was originally developed alongside the lecture Systems Analysis at the Swiss Federal Institute of Technology (ETH) Zürich, on the basis of lecture notes developed over 12 years. The lecture, together with others on analysis, differential equations and linear algebra, belongs to the basic mathematical knowledge imparted on students of environmental sciences and other related areas at ETH Zürich. […] The book aims to be more than a mathematical treatise on the analysis and modeling of natural systems, yet a certain set of basic mathematical skills are still necessary. We will use linear differential equations, vector and matrix calculus, linear algebra, and even take a glimpse at nonlinear and partial differential equations. Most of the mathematical methods used are covered in the appendices. Their treatment there is brief however, and without proofs. Therefore it will not replace a good mathematics textbook for someone who has not encountered this level of math before. […] The book is firmly rooted in the algebraic formulation of mathematical models, their analytical solution, or — if solutions are too complex or do not exist — in a thorough discussion of the anticipated model properties.”

…

I finished the book yesterday – here’s my goodreads review (note that the first link in this post was not to the goodreads profile of the book for the reason that goodreads has listed the book under the wrong title). I’ve never read a book about ‘systems analysis’ before, but as I also mention in the goodreads review it turned out that much of this stuff was stuff I’d seen before. There are 8 chapters in the book. Chapter one is a brief introductory chapter, the second chapter contains a short overview of mathematical models (static models, dynamic models, discrete and continuous time models, stochastic models…), the third chapter is a brief chapter about static models (the rest of the book is about dynamic models, but they want you to at least know the difference), the fourth chapter deals with linear (differential equation) models with one variable, chapter 5 extends the analysis to linear models with several variables, chapter 6 is about non-linear models (covers e.g. the Lotka-Volterra model (of course) and the Holling-Tanner model (both were covered in Ecological Dynamics, in much more detail)), chapter 7 deals briefly with time-discrete models and how they are different from continuous-time models (I liked Gurney and Nisbet’s coverage of this stuff a lot better, as that book had a lot more details about these things) and chapter 8 concludes with models including both a time- and a space-dimension, which leads to coverage of concepts such as mixing and transformation, advection, diffusion and exchange in a model context.

How to derive solutions to various types of differential equations, how to calculate eigenvalues and what these tell you about the model dynamics (and how to deal with them when they’re imaginary), phase diagrams/phase planes and topographical maps of system dynamics, fixed points/steady states and their properties, what’s an attractor?, what’s hysteresis and in which model contexts might this phenomenon be present?, the difference between homogeneous and non-homogeneous differential equations and between first order- and higher-order differential equations, which role do the initial conditions play in various contexts?, etc. – it’s this kind of book. Applications included in the book are varied; some of the examples are (as already mentioned) derived from the field of ecology/mathematical biology (there are also e.g. models of phosphate distribution/dynamics in lakes and models of fish population dynamics), others are from chemistry (e.g. models dealing with gas exchange – Fick’s laws of diffusion are e.g. covered in the book, and they also talk about e.g. Henry’s law), physics (e.g. the harmonic oscillator, the Lorenz model) – there are even a few examples from economics (e.g. dealing with interest rates). As they put it in the introduction, “Although most of the examples used here are drawn from the environmental sciences, this book is not an introduction to the theory of aquatic or terrestrial environmental systems. Rather, a key goal of the book is to demonstrate the virtually limitless practical potential of the methods presented.” I’m not sure if they succeeded, but it’s certainly clear from the coverage that you can use the tools they cover in a lot of different contexts.
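To make the eigenvalue point a bit more concrete, here is a minimal sketch that is not taken from the book: it assumes a two-variable linear model dx/dt = A·x, computes the eigenvalues of A from its trace and determinant, and reads off whether the fixed point at the origin is stable and whether the solutions oscillate (a non-zero imaginary part). The function names and the damped-oscillator parameters are made up for illustration.

```python
import cmath

def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] from lambda^2 - trace*lambda + det = 0."""
    trace = a + d
    det = a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)  # complex sqrt handles a negative discriminant
    return (trace + root) / 2, (trace - root) / 2

def classify(a, b, c, d, tol=1e-12):
    """Return (stability, oscillates) for the fixed point of dx/dt = A x."""
    l1, l2 = eigenvalues_2x2(a, b, c, d)
    oscillates = abs(l1.imag) > tol          # imaginary part means oscillation
    max_real = max(l1.real, l2.real)         # the largest real part decides growth or decay
    if max_real < -tol:
        stability = "stable"
    elif max_real > tol:
        stability = "unstable"
    else:
        stability = "marginal"               # borderline case, e.g. an undamped oscillator
    return stability, oscillates

# Harmonic oscillator x'' + 2*g*x' + w^2*x = 0 written as a first-order system,
# with illustrative values w = 1 and g = 0.1 (damped) or g = 0 (undamped).
print(classify(0, 1, -1, -0.2))  # damped oscillator
print(classify(0, 1, -1, 0))     # undamped oscillator
print(classify(1, 0, 0, -1))     # saddle point
```

The same reading of the eigenvalues carries over to larger linear systems, although there you would normally compute them numerically rather than from a closed-form formula.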

I’m not quite sure how much mathematics you’ll need to know in order to read and understand this book on your own. In the coverage they seem to me to assume some familiarity with linear algebra, multi-variable calculus, and complex analysis (and related trigonometry), and perhaps also basic combinatorics – for example factorials are included without comments about how they work. You should probably take the authors at their word when they say above that the book “will not replace a good mathematics textbook for someone who has not encountered this level of math before”. A related observation is that regardless of whether you’ve seen this sort of stuff before or not, this is probably not the sort of book you’ll be able to read in a day or two.

I think I’ll try to cover the book in more detail (with much more specific coverage of some main points) tomorrow.

A couple of abstracts

Abstract:

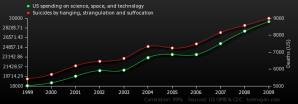

“There are both costs and benefits associated with conducting scientific- and technological research. Whereas the benefits derived from scientific research and new technologies have often been addressed in the literature (for a good example, see Evenson et al., 1979), few of the major non-monetary societal costs associated with major expenditures on scientific research and technology have however so far received much attention.

In this paper we investigate one of the major non-monetary societal cost variables associated with the conduct of scientific and technological research in the United States, namely the suicides resulting from research activities. In particular, in this paper we analyze the association between scientific- and technological research expenditure patterns and the number of suicides committed using one of the most common suicide methods, namely that of hanging, strangulation and suffocation (-HSS). We conclude from our analysis that there’s a very strong association between scientific research expenditures in the US and the frequency of suicides committed using the HSS method, and that this relationship has been stable for at least a decade. An important aspect in the context of the association is the precise mechanisms through which the increase in HSS suicides takes place. Although the mechanisms are still not well-elucidated, we suggest that one of the important components in this relationship may be judicial research, as initial analyses of related data have suggested that this variable may be important. We argue in the paper that our initial findings in this context provide impetus for considering this pathway a particularly important area of future research in this field.”

Graph 1:

…

Abstract:

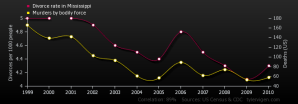

“Murders by bodily force (-Mbf) make up a substantial number of all homicides in the US. Previous research on the topic has shown that this criminal activity causes the compromise of some common key biological functions in victims, such as respiration and cardiac function, and that many people with close social relationships with the victims are psychosocially affected as well, which means that this societal problem is clearly of some importance.

Researchers have known for a long time that the marital state of the inhabitants of the state of Mississippi and the dynamics of this variable have important nation-wide effects. Previous research has e.g. analyzed how the marriage rate in Mississippi determines the US per capita consumption of whole milk. In this paper we investigate how the dynamics of Mississippian marital patterns relate to the national Mbf numbers. We conclude from our analysis that it is very clear that there’s a strong association between the divorce rate in Mississippi and the national level of Mbf. We suggest that the effect may go through previously established channels such as e.g. milk consumption, but we also note that the precise relationship has yet to be elucidated and that further research on this important topic is clearly needed.”

…

This abstract is awesome as well, but I didn’t write it…

…

The ‘funny’ part is that I could actually easily imagine papers not too dissimilar to the ones just outlined getting published in scientific journals. Indeed, in terms of the structure I’d claim that many published papers are exactly like this. They do significance testing as well, sure, but hunting down p-values is not much different from hunting down correlations and it’s quite easy to do both. If that’s all you have, you haven’t shown much.
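How easy the correlation hunt is can be shown with a small simulation. The sketch below is a toy setup of its own, not based on the data behind the abstracts above: it generates 200 completely independent random walks with 11 observations each (think of annual figures for roughly a decade), computes the Pearson correlation for every pair, and reports the strongest one. The numbers 200 and 11 and all function names are assumptions chosen for illustration.

```python
import random
from itertools import combinations

def random_walk(n_steps, rng):
    """Random walk with independent standard normal increments."""
    level, path = 0.0, []
    for _ in range(n_steps):
        level += rng.gauss(0.0, 1.0)
        path.append(level)
    return path

def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def strongest_pair(series):
    """Search all pairs and return the indices and correlation of the strongest one."""
    i, j = max(
        combinations(range(len(series)), 2),
        key=lambda ij: abs(pearson(series[ij[0]], series[ij[1]])),
    )
    return (i, j), pearson(series[i], series[j])

rng = random.Random(0)  # fixed seed so the run is reproducible
series = [random_walk(11, rng) for _ in range(200)]
(i, j), r = strongest_pair(series)
print(f"searched {200 * 199 // 2} pairs; strongest: series {i} and {j}, r = {r:.3f}")
```

None of these series has anything to do with any other, yet with nearly twenty thousand pairs to choose from the best match will look very impressive on a graph. Trending series measured over a short period make this even easier, which is part of the reason a strong correlation on its own shows so little.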

The Structure of Scientific Revolutions

I read the book yesterday. Here’s what I wrote on goodreads:

“I’m not rating this, but I’ll note that ‘it’s an interesting model.’

I’d only really learned (…heard?) about Kuhn’s ideas through cultural osmosis (and/or perhaps a brief snippet of his work in HS? Maybe. I honestly can’t remember if we read Kuhn back then…). It’s worth actually reading the book, and I should probably have done that a long time ago.”

…

I was thinking about just quoting extensively from the work in this post in order to make clear what the book is about, but I’m not sure this is actually the best way to proceed. I know some readers of this blog have already read Kuhn, so it may in some sense be more useful if I say a little bit about what I think about the things he’s said, rather than focusing only on what he’s said in the work. I’ve tried to make this the sort of post that can be read and enjoyed both by people who have not read Kuhn, and by people who have, though I may not have been successful. That said, I have felt it necessary to include at least a few quotes from the work along the way in the following, in order not to misrepresent Kuhn too much.

So anyway, ‘the general model’ Kuhn has of science is one where there are three states of science. ‘Normal science’ is perhaps the most common state (this is actually not completely clear as I don’t think he ever explicitly says as much (I may be wrong), and the inclusion of concepts like ‘mini-revolutions’ (the ‘revolutions can happen on many levels’-part) makes things even less clear, but I don’t think this is an unreasonable interpretation), where scientists in a given field have adopted a given paradigm and work and tinker with stuff within that paradigm, exploring all the nooks and crannies: “‘normal science’ means research firmly based upon one or more past scientific achievements, achievements that some particular scientific community acknowledges for a time as supplying the foundation for its further practice.” Exactly what a paradigm is is still a bit unclear to me, as he seems to me to be using the term in a lot of different ways (“One sympathetic reader, who shares my conviction that ‘paradigm’ names the central philosophical elements of the book, prepared a partial analytic index and concluded that the term is used in at least twenty-two different ways.” – a quote from the postscript).

So there’s ‘normal science’, where everything is sort of proceeding according to plan. And then there are two other states: A state of crisis, and a state of revolution. A crisis state is a state which comes about when the scientists working in their nooks and crannies gradually come to realize that perhaps the model of the world they’ve been using (‘paradigm’) may not be quite right. Something is off, the model has problems explaining some of the results – so they start questioning some of the defining assumptions. During a crisis scientists become less constrained by the paradigm when looking at the world, research becomes in some sense more random; a lot of new ideas pop up as to how to deal with the problem(s), and at some point a scientific revolution resolves the crisis – a new model replaces the old one, and the scientists can go back to doing ‘normal science’ work, which is now defined by the new paradigm rather than the old one. Young people and/or people not too closely affiliated with the old model/paradigm are, Kuhn argues, more likely to come up with the new idea that will resolve the problem which caused the crisis, and young people and new people in the field are more likely than their older colleagues to ‘convert’ to the new way of thinking. Such dynamics are actually, he adds, part of what keeps ‘normal science’ going and makes it able to proceed in the manner it does; scientists are skeptical people, and if scientists were to question the basic assumptions of the field they’re working in all the time, they’d never be able to specialize in the way they do, exploring all the nooks and crannies; they’d be spending all their time arguing about the basics instead. It should be noted that crises don’t always lead to a revolution; sometimes the crisis can be resolved without one. He also argues that sometimes a revolution can take place without a major crisis, though he seems to think the existence of such crises is important to his overall thesis.

Crises and revolutions need not be the result of annoying data that does not fit – they may also be the result of e.g. technological advances, like the development of new tools and technology which can e.g. enable scientists to see things they did not use to be able to see. Sometimes the theory upon which a new paradigm is based was presented much earlier, during the ‘normal science’ phase, but nobody took the theory seriously back then because the problems that led to the crisis had not really manifested at that time.

Scientists make progress when they’re doing normal science, in the sense that they tend to learn a lot of new stuff about the world during these phases. But revolutions can both overturn some of that progress (‘that was not the right way to think about these things’) and lead to further progress and new knowledge. An important thing to note here is that how paradigms change is in part a sociological process; part of what leads to change is the popularity of different models. Kuhn argues that scientists tend to prefer new paradigms which solve many of the same problems the old paradigm did, as well as some of those troublesome problems which led to the crisis – so it’s not like revolutions will necessarily lead people back to square one, with all the scientific progress made during the preceding ‘normal science’ period wiped out. But there are some problems. Textbooks, Kuhn argues, are written by the winners (i.e. the people who picked the right paradigm and get to write textbooks), and so they will often deliberately and systematically downplay the differences between the scientists working in the field now and the scientists working in the field – or what came before it (the fact that normal science is conducted at all is a sign of maturity of a field, Kuhn notes) – in the past, painting a picture of gradual, cumulative progress in the field (gigantum humeris insidentes) which perhaps is not the right way to think about what has actually happened. Sometimes a revolution will make scientists stop asking questions they used to ask, without any answer being provided by the new paradigm; there are costs as well as benefits associated with the dramatic change that takes place during scientific revolutions:

“In the process the community will sustain losses. Often some old problems must be banished. Frequently, in addition, revolution narrows the scope of the community’s professional concerns, increases the extent of its specialization, and attenuates its communication with other groups, both scientific and lay. Though science surely grows in depth, it may not grow in breadth as well. If it does so, that breadth is manifest mainly in the proliferation of scientific specialties, not in the scope of any single specialty alone. Yet despite these and other losses to the individual communities, the nature of such communities provides a virtual guarantee that both the list of problems solved by science and the precision of individual problem-solutions will grow and grow. At least, the nature of the community provides such a guarantee if there is any way at all in which it can be provided. What better criterion than the decision of the scientific group could there be?”

I also quote this part to focus on an area where I am in disagreement with Kuhn – this relates to his implicit assumption that scientific paradigms (whatever that term may mean) are decided by scientists alone. Certainly this is not the case to the extent that the scientific paradigms equal the rules of the game for conducting science. This is actually one of several major problems I have with the model. Doing science requires money, and people who pay for the stuff will have their own ideas about what you can get away with asking questions about. What the people paying for the stuff have allowed scientists to investigate has changed over time, but some things have changed more than others and what might be termed ‘the broader cultural dimension’ seems important to me; those variables may play a very important role in deciding where science and scientists may or may not go, and although the book deals with sociological stuff in quite a bit of detail, the exclusion of broader cultural and political factors in the model is ‘a bit’ of a problem to me. Scientists are certainly not unconstrained today by such ‘external factors’, but most scientists alive today will not face anywhere near the same kinds of constraints on their research as their forebears living 300 years ago did – religion is but one of several elephants in the room (and that one is still really important in some parts of the world, though the role it plays may have changed).

Another big problem is how to test a model like this. Kuhn doesn’t try. He only talks about anecdotes; specific instances, examples which according to him illustrate a broader point. I’m not sure his model is completely stupid, but there are alternative ways to think about these things, including mental models with variables omitted from his model which likely lead to a better appreciation of the processes involved. Money and politics, culture/religion, coalition building and the dynamics of negotiation, things like that. How do institutions fit into all of this? These things have very important effects on how science is conducted, and the (near-)exclusion of them in a model of how to conceptualize the scientific process at least somewhat inspired by sociology and related stuff seems more than a bit odd to me. I’m also not completely clear on why this model is even useful, what it adds. You can presumably approximate pretty much any developmental process by some punctuated equilibrium model like this – it seems to me to be a bit like doing a Taylor expansion, if you add enough terms it’ll look plausible, especially if you add ‘crises’ as well to the model to explain the cases where no clear trend is observable. Stable development is normal science, discontinuities are revolutions, high-variance areas are crises; framed that way you suddenly realize that it’s very convenient indeed for Kuhn that crises don’t always lead to revolutions and that revolutions need not be preceded by crises – if those requirements were imposed, on the other hand, the underlying data-generating process would at least be somewhat constrained by the model (though how to actually measure ‘progress’ and ‘variance’ are still questions in need of an answer). I know that the model outlined would not explain a set of completely randomly generated numbers, but in this context I think it would do quite well – even if it’s arguable whether it has actually explained anything at all.
Add to the model imprecise language – 22 definitions… – and the observation that the model builder seems to be cherry-picking examples to make specific points, and what you end up with is, well…
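
To make the ‘add enough terms and it’ll look plausible’ point a bit more concrete, here is a minimal sketch of my own (it is not anything Kuhn proposes). It assumes a toy ‘progress’ series generated as a pure random walk and a hypothetical description in which each stable phase is a constant level and each ‘revolution’ is a jump between levels. The function names and the choice of 64 observations are assumptions made only for the illustration.

```python
import random


def random_walk(n, seed=0):
    """Toy 'progress' series: cumulative sum of Gaussian noise (an assumption)."""
    rng = random.Random(seed)
    level, walk = 0.0, []
    for _ in range(n):
        level += rng.gauss(0.0, 1.0)
        walk.append(level)
    return walk


def segment_sse(series, n_segments):
    """Squared error left over when each equal-length 'stable phase'
    is described by its own mean level."""
    seg_len = len(series) // n_segments
    total = 0.0
    for start in range(0, len(series), seg_len):
        chunk = series[start:start + seg_len]
        mean = sum(chunk) / len(chunk)
        total += sum((x - mean) ** 2 for x in chunk)
    return total


walk = random_walk(64)

# Doubling the number of phases nests the segments, so the leftover
# error can only shrink; with one phase per observation it is zero.
errors = {k: segment_sse(walk, k) for k in (1, 2, 4, 8, 16, 32, 64)}
for k, sse in errors.items():
    print(f"{k:2d} stable phases -> unexplained variation {sse:10.2f}")
```

The series contains no paradigms at all, yet allowing more ‘revolutions’ always makes the description fit at least as well, and with enough of them it fits perfectly. A good fit of this kind says very little about whether the description has explained anything.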

The book was sort of interesting, but, yeah… I feel slightly tempted to revise my goodreads review after having written this post, but I’m not sure I will – it was worth reading the book and I probably should have done it a long time ago, even if only to learn what all the fuss was about (it’s my impression, which may be faulty, that this one is (‘considered to be’) one of the must-reads in this genre). Some of the hypotheses derived from the model seem perhaps to be more testable than others (‘young people are more likely to spark important development in a field’), but even in those cases things get messy (‘what do you mean by ‘important’, and who is to decide that?’, ‘how young?’). A problem with the model which I have not yet mentioned is incidentally that his model of how interactions between fields, and between the scientists in those fields, take place and proceed seems to me to leave a lot to be desired; the model is very ‘field-centric’. How different fields (which are not about to combine into one), and the people working in them, interact with each other may be yet another very important variable not explored in the model.

As a historical narrative about a few specific important scientific events in the past, Kuhn’s account probably isn’t bad (and it has some interesting observations related to the history of science which I did not know). As ‘a general model of how science works’, well…

The Origin and Evolution of Cultures (V)

This will be my last post about the book. Go here for a background post and my overall impression of the book – I’ll limit this post to coverage of the ‘Simple Models of Complex Phenomena’-chapter which I mentioned in that post, as well as a few observations from the introduction to part 5 of the book, which talks a little bit about what the chapter is about in general terms. The stuff they write in the chapter is in a way both an overview of the kind of approach to things which you may well end up adopting unconsciously if you’re working in a field like economics or ecology, and a defence of such an approach; as mentioned in the previous post about the book, I’ve talked about these sorts of things before, but there’s some new stuff in here as well. The chapter is written in the context of Boyd and Richerson’s coverage of their ‘Darwinian approach to evolution’, but many of the observations here are of a much more general nature and relate to the application of statistical and mathematical modelling in a much broader context; and some of those observations that do not directly relate to broader contexts still have, as far as I can see, what might be termed ‘generalized analogues’. The chapter coverage was actually interesting enough for me to seriously consider reading a book or two on these topics (books such as this one), despite the amount of work I know may well be required to deal with a book like this.

I exclude a lot of stuff from the chapter in this post, and there are a lot of other good chapters in the book. Again, you should read this book.

…

Here’s the stuff from the introduction:

“Chapter 19 is directed at those in the social sciences unfamiliar with a style of deploying mathematical models that is second nature to economists, evolutionary biologists, engineers, and others. Much science in many disciplines consists of a toolkit of very simple mathematical models. To many not familiar with the subtle art of the simple model, such formal exercises have two seemingly deadly flaws. First, they are not easy to follow. […] Second, motivation to follow the math is often wanting because the model is so cartoonishly simple relative to the real world being analyzed. Critics often level the charge ‘‘reductionism’’ with what they take to be devastating effect. The modeler’s reply is that these two criticisms actually point in opposite directions and sum to nothing. True, the model is quite simple relative to reality, but even so, the analysis is difficult. The real lesson is that complex phenomena like culture require a humble approach. We have to bite off tiny bits of reality to analyze and build up a more global knowledge step by patient step. […] Simple models, simple experiments, and simple observational programs are the best the human mind can do in the face of the awesome complexity of nature. The alternatives to simple models are either complex models or verbal descriptions and analysis. Complex models are sometimes useful for their predictive power, but they have the vice of being difficult or impossible to understand. The heuristic value of simple models in schooling our intuition about natural processes is exceedingly important, even when their predictive power is limited. […] Unaided verbal reasoning can be unreliable […] The lesson, we think, is that all serious students of human behavior need to know enough math to at least appreciate the contributions simple mathematical models make to the understanding of complex phenomena. The idea that social scientists need less math than biologists or other natural scientists is completely mistaken.”

And below I’ve posted the chapter coverage:

“A great deal of the progress in evolutionary biology has resulted from the deployment of relatively simple theoretical models. Staddon’s, Smith’s, and Maynard Smith’s contributions illustrate this point. Despite their success, simple models have been subjected to a steady stream of criticism. The complexity of real social and biological phenomena is compared to the toylike quality of the simple models used to analyze them and their users charged with unwarranted reductionism or plain simplemindedness.

This critique is intuitively appealing—complex phenomena would seem to require complex theories to understand them—but misleading. In this chapter we argue that the study of complex, diverse phenomena like organic evolution requires complex, multilevel theories but that such theories are best built from toolkits made up of a diverse collection of simple models. Because individual models in the toolkit are designed to provide insight into only selected aspects of the more complex whole, they are necessarily incomplete. Nevertheless, students of complex phenomena aim for a reasonably complete theory by studying many related simple models. The neo-Darwinian theory of evolution provides a good example: fitness-optimizing models, one and multiple locus genetic models, and quantitative genetic models all emphasize certain details of the evolutionary process at the expense of others. While any given model is simple, the theory as a whole is much more comprehensive than any one of them.”

“In the last few years, a number of scholars have attempted to understand the processes of cultural evolution in Darwinian terms […] The idea that unifies all this work is that social learning or cultural transmission can be modeled as a system of inheritance; to understand the macroscopic patterns of cultural change we must understand the microscopic processes that increase the frequency of some culturally transmitted variants and reduce the frequency of others. Put another way, to understand cultural evolution we must account for all of the processes by which cultural variation is transmitted and modified. This is the essence of the Darwinian approach to evolution.”

“In the face of the complexity of evolutionary processes, the appropriate strategy may seem obvious: to be useful, models must be realistic; they should incorporate all factors that scientists studying the phenomena know to be important. This reasoning is certainly plausible, and many scientists, particularly in economics […] and ecology […], have constructed such models, despite their complexity. On this view, simple models are primitive, things to be replaced as our sophistication about evolution grows. Nevertheless, theorists in such disciplines as evolutionary biology and economics stubbornly continue to use simple models even though improvements in empirical knowledge, analytical mathematics, and computing now enable them to create extremely elaborate models if they care to do so. Theorists of this persuasion eschew more detailed models because (1) they are hard to understand, (2) they are difficult to analyze, and (3) they are often no more useful for prediction than simple models. […] Detailed models usually require very large amounts of data to determine the various parameter values in the model. Such data are rarely available. Moreover, small inaccuracies or errors in the formulation of the model can produce quite erroneous predictions. The temptation is to ‘‘tune’’ the model, making small changes, perhaps well within the error of available data, so that the model produces reasonable answers. When this is done, any predictive power that the model might have is due more to statistical fitting than to the fact that it accurately represents actual causal processes. It is easy to make large sacrifices of understanding for small gains in predictive power.”
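
The point about ‘tuning’ in the passage above can be illustrated with a small sketch of my own; it is not taken from the book. It assumes a hypothetical data-generating process, y = 2x plus Gaussian noise, and compares a simple least-squares line with a deliberately over-tuned ‘memorizer’ that reproduces the nearest training observation. All names and parameter values are assumptions chosen for the illustration.

```python
import random


def make_data(n, rng):
    """Noisy observations of a hypothetical process y = 2x + noise."""
    xs = [rng.uniform(0.0, 1.0) for _ in range(n)]
    ys = [2.0 * x + rng.gauss(0.0, 1.0) for x in xs]
    return xs, ys


def fit_line(xs, ys):
    """Simple model: ordinary least squares with a single predictor."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return lambda x: my + slope * (x - mx)


def fit_memorizer(xs, ys):
    """Over-tuned model: return the y-value of the nearest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def mse(predict, xs, ys):
    """Mean squared prediction error."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(1)
train_x, train_y = make_data(200, rng)
test_x, test_y = make_data(200, rng)

results = {}
for name, fit in (("simple line", fit_line), ("memorizer", fit_memorizer)):
    model = fit(train_x, train_y)
    results[name] = (mse(model, train_x, train_y), mse(model, test_x, test_y))
    print(f"{name:12s} train MSE {results[name][0]:.3f}   test MSE {results[name][1]:.3f}")
```

The memorizer matches the data it was tuned on exactly, but that fit reflects the noise rather than the underlying process, so on fresh data it should do worse than the line. This is only one crude way of over-fitting, but it is the same trade-off the quote describes: apparent accuracy bought through statistical fitting rather than through representing the causal process.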