Quotes

i. “Every age has its peculiar folly; some scheme, project, or phantasy into which it plunges, spurred on either by the love of gain, the necessity of excitement, or the mere force of imitation. Failing in these, it has some madness, to which it is goaded by political or religious causes, or both combined.” (Charles Mackay)

ii. “Men, it has been well said, think in herds; it will be seen that they go mad in herds, while they only recover their senses slowly, and one by one.” (-ll-)

iii. “Credulity is always greatest in times of calamity.” (-ll-)

iv. “Women liked nothing better than making you defend yourself, and once you started, she had won.” (Robert Jordan, Knife of Dreams, Book Eleven of The Wheel of Time (p. 198)).

v. “….what adventure is worth leaving your wife to die alone?” (Robert Jordan, Knife of Dreams, Book Eleven of The Wheel of Time (p. 200)).

vi. “I have always been ready to die for her.” […] “Better to live for her, though, wouldn’t you say?” (Robert Jordan, Knife of Dreams, Book Eleven of The Wheel of Time (p. 816))

vii. “Being in control wasn’t so much about the power you had, but the power you implied that you had.” (Robert Jordan, The Gathering Storm, Book 12 of The Wheel of Time (p. 116))

viii. “He felt like going drinking, forgetting who he was — and who people thought he was — for a while. But if he got drunk, he was likely to let his face show by accident. Perhaps begin to talk about who he really was. You never could tell what a man would do when he was drunk, even if that man was your own self.” (Robert Jordan, Towers of Midnight, Book 13 of The Wheel of Time (p. 349))

ix. “He glanced at himself in the glass’s reflection, making certain the coat was straight. Small things were important. Seconds were small things, and if you heaped enough of those on top of one another, they became a man’s life.” (Robert Jordan, Towers of Midnight, Book 13 of The Wheel of Time (p. 679))

x. “There seems to be an idealistic form of geek thinking that holds that if only we made decisions better, we would never make mistakes. I was a young adherent, a worshipper at the altar of “If Only I Were Infinitely Smart.” Fortunately, I got over it. I learned the value of reversibility […] and realized the value of making decisions reversible. […] In general, we should treat reversible decisions differently than irreversible decisions. There’s great value in reviewing, double-checking, triple-checking irreversible decisions. The pace should be slow and deliberate. […] How about reversible decisions? Most software design decisions are easily reversible. […] we can so easily reverse a decision if it turns out to be wrong. Because there is so little value to avoiding mistakes, we shouldn’t invest much in doing so.” (Kent Beck, Tidy First? (pp. 103-105))

xi. “Software development and architecture have patterns and antipatterns that can be applied (or recognized) in writing code and architecting systems. A pattern is a reusable solution that has been shown to be effective when used to solve a problem. […] Antipatterns are not the direct opposite of patterns. They are solutions that look like they solve a problem but have consequences that outweigh any potential benefits. […] This book applies the concept of patterns and antipatterns to communication. People often quote Brian Foote and Joseph Yoder’s 1997 paper “Big Ball of Mud” (and for good reason): “If you think good architecture is expensive, try bad architecture.” It means that creating good architecture requires an investment, but not investing will result in bad architecture that costs more in the long run. The same thing should be said for communication: If you think good communication is expensive, try bad communication. Investing in good (successful) communication is less expensive than bearing the costs of bad (unsuccessful) communication.” (Jacqui Read, Communication Patterns (pp. 10-11)).

xii. “Mixing levels of abstraction is a communication antipattern that has a counterpart in the coding world. If you have ever coded, you will likely know mixing levels of abstraction as a sin or a code smell.2 Although putting all the information someone could need into one diagram might seem appropriate, this leads to clutter and confusion from the audience’s perspective. […] Using different levels of abstraction across multiple diagrams allows you to communicate appropriately for the audience, while still ensuring that all relevant information is captured. […] The C4 model is a hierarchy of abstractions. It uses an abstraction first approach (prioritizing abstraction and building everything else around it). […] C4 models, based around a hierarchy of abstractions, are an excellent way to illustrate the need to keep levels of abstraction separate in diagrams. This separation rule applies to all types of diagrams.” (ibid, pp. 28-33)

xiii. “It does make me feel guilty, the knight notes, that we have to treat them like this. They’re victims too.

It was truth, but one that Nomad had long ago made peace with. You didn’t always get to fight the right people. In fact, you often had to fight the wrong ones — at least until you could stop the men and women who gave the orders.” (Brandon Sanderson, The Sunlit Man, p. 327)

xiv. “The best of our fiction is by novelists who allow that it is as good as they can give, and the worst by novelists who maintain that they could do much better if only the public would let them.” (J. M. Barrie)

xv. “Never ascribe to an opponent motives meaner than your own.” (-ll-)

xvi. “I’m not young enough to know everything.” (-ll-)

xvii. “The life of every man is a diary in which he means to write one story, and writes another; and his humblest hour is when he compares the volume as it is with what he vowed to make it.” (-ll-)

xviii. “Shall we make a new rule of life from tonight: always to try to be a little kinder than is necessary?” (-ll-)

xix. “Worry never robs tomorrow of its sorrow, but only saps today of its strength.” (Archibald Joseph Cronin)

xx. “On the morning of 16 December General Middleton’s VIII Corps had a formal corps reserve consisting of one armored combat command and four engineer combat battalions. In dire circumstances Middleton might count on three additional engineer combat battalions which, under First Army command, were engaged as the 1128th Engineer Group in direct support of the normal engineer operations on foot in the VIII Corps area. In exceptionally adverse circumstances, that is under conditions then so remote as to be hardly worth a thought, the VIII Corps would have a last combat residue-poorly armed and ill-trained for combat-made up of rear echelon headquarters, supply, and technical service troops, plus the increment of stragglers who might, in the course of battle, stray back from the front lines. General Middleton would be called upon to use all of these “reserves.” Their total effect in the fight to delay the German forces hammering through the VIII Corps center would be extremely important but at the same time generally incalculable, nor would many of these troops enter the pages of history.1

A handful of ordnance mechanics manning a Sherman tank fresh from the repair shop are seen at a bridge. By their mere presence they check an enemy column long enough for the bridge to be demolished. The tank and its crew disappear. They have affected the course of the Ardennes battle, even though minutely, but history does not record from whence they came or whither they went. A signal officer checking his wire along a byroad encounters a German column; he wheels his jeep and races back to alert a section of tank destroyers standing at a crossroad. Both he and the gunners are and remain anonymous. Yet the tank destroyers with a few shots rob the enemy of precious minutes, even hours. A platoon of engineers appears in one terse sentence of a German commander’s report. They have fought bravely, says the foe, and forced him to waste a couple of hours in deployment and maneuver. In this brief emergence from the fog of war the engineer platoon makes its bid for recognition in history. That is all. A small group of stragglers suddenly become tired of what seems to be eternally retreating. Miles back they ceased to be part of an organized combat formation, and recorded history, at that point, lost them. The sound of firing is heard for fifteen minutes, an hour, coming from a patch of woods, a tiny village, the opposite side of a hill. The enemy has been delayed; the enemy resumes the march westward. Weeks later a graves registration team uncovers mute evidence of a last-ditch stand at woods, village, or hill.” (Hugh Cole, The Ardennes: The Battle of the Bulge (US Military History of WW II), chapter XIV).

Montemayor’s youtube channel

Here’s the link. Not many videos, but quality over quantity. This is wonderful stuff.

Quotes

i. “You can never know everything, […] and part of what you know is always wrong. Perhaps even the most important part. A portion of wisdom lies in knowing that.” (Robert Jordan, Winter’s Heart: Book Nine of The Wheel of Time (p. 619))

ii. “It is the enemy you underestimate who kills you.” (Robert Jordan, A Crown of Swords: Book Seven of The Wheel of Time (p. 247))

iii. “When a woman plays the fool, look for the man.” (Robert Jordan, The Path of Daggers: Book Eight of The Wheel of Time (p. 75))

iv. “A secret spoken finds wings.” (Robert Jordan, The Path of Daggers: Book Eight of The Wheel of Time (p. 437))

v. “The worst mistake is to make the same one twice” (Robert Jordan, The Path of Daggers: Book Eight of ‘The Wheel of Time’ (p. 473)

vi. “A distributed system is one in which the failure of a computer you didn’t even know existed can render your own computer unusable.” (Leslie Lamport, as quoted in Sam Newman’s Building Microservices, 2nd edition (p. 17)).

vii. “Unfortunately, people have come to view the monolith as something to be avoided — as something inherently problematic. I’ve met multiple people for whom the term monolith is synonymous with legacy. This is a problem. A monolithic architecture is a choice, and a valid one at that. I’d go further and say that in my opinion it is the sensible default choice as an architectural style. In other words, I am looking for a reason to be convinced to use microservices, rather than looking for a reason not to use them.” (Sam Newman, Building Microservices, 2nd edition (p. 18))

viii. “The connections between modules are the assumptions which the modules make about each other.” (David Parnas, as quoted in Sam Newman’s Building Microservices, 2nd edition (p. 37)

ix. “…he had quickly learned one thing from being a king: the more authority you gained, the less control you had over your life. […] Being in control wasn’t so much about the power you had, but the power you implied that you had.” (Robert Jordan, The Gathering Storm: Book 12 of the Wheel of Time (pp. 104, 116))

x. “He wasn’t stupid; he just liked to think about things. But he’d never been good with people […]. Faile had shown him that he didn’t need to be good with people, or even with women, as long as he could make one person understand him. He didn’t have to be good at talking to anyone else as long as he could talk to her.” (Robert Jordan, The Gathering Storm: Book 12 of the Wheel of Time (p. 162))

xi. Sometimes […] we are so concerned with the things we have done that we do not stop to consider the things we have not.” (Robert Jordan, The Gathering Storm: Book 12 of the Wheel of Time (p. 245))

xii. “A general who draws his sword has put aside his baton and become a common soldier.” (Robert Jordan, Knife of Dreams: Book Eleven of ‘The Wheel of Time (p. 816))

xiii. “Always plan ahead, […] but worry too hard over next year, and you can trip over tomorrow.” (Robert Jordan, Crossroads of Twilight: Book Ten of ‘The Wheel of Time (p. 302))

xiv. “Change came so slowly you never noticed it creeping up on you, or far too fast for comfort, but it came. Nothing stayed the same, even when you thought it did. Or hoped it would.” (Robert Jordan, Crossroads of Twilight: Book Ten of ‘The Wheel of Time (p. 356))

xv. “…even oaths of fealty left room for the most loyal people doing the worst possible thing in the belief that it was in your best interest.” (Robert Jordan, Crossroads of Twilight: Book Ten of ‘The Wheel of Time (p. 364))

xvi. “…she had no more idea how to deal with being in love than a duck had about shearing sheep.” (Robert Jordan, Crossroads of Twilight: Book Ten of ‘The Wheel of Time (p. 394))

xvii. “…nothing at all had been decided except that more talk was necessary before anything could be decided.” (Robert Jordan, Crossroads of Twilight: Book Ten of ‘The Wheel of Time (p. 425))

xiii. “People never really changed, yet the world did, with disturbing regularity. You just had to live with it, or at least live through it. Now and then, with luck, you could affect the direction of the changes, but even if you stopped one, you only set another in motion.” (Robert Jordan, Crossroads of Twilight: Book Ten of ‘The Wheel of Time (p. 474))

xix. “Sometimes a man just had to live with it. Most of the time, since there was nothing else to do.” (Robert Jordan, Crossroads of Twilight: Book Ten of ‘The Wheel of Time (p. 500))

xx. ““When you go to buy a sack of flour,” she said, “wear plain wool so the seller thinks you can’t afford to pay any more than you must. When you’re after flour by the wagonload, wear jewels so she thinks you can afford to come back for all she can lay hands on.” […] Dress poor when you want a small favor, and fine when you want a large one.” (Robert Jordan, Crossroads of Twilight: Book Ten of ‘The Wheel of Time (p. 501))

Quotes

i. “Do what you must, then pay the price for it, was what she had been taught, by the same women who had marked off those forbidden areas. It was refusal to admit the debt, refusal to pay, that often turned necessity to evil.” (A Crown of Swords (p. 230), Robert Jordan)

ii. “Wounds to the pride are remembered long after wounds to the flesh.” (-ll-, p. 238)

iii. “…a general can take care of the living or weep for the dead, but he cannot do both.’” (The Shadow Rising (p. 686), Robert Jordan)

iv. “He strains to hear a whisper who refuses to hear a shout.” (The Fires Of Heaven (p. 264), Robert Jordan)

v. “I take people as they are, not as I would like them to be, or else I leave them.” (The Fires Of Heaven (p. 538), Robert Jordan).

vi. “Only a battle lost is sadder than a battle won.” (-ll- (p. 655))

vii. “An open sack hides nothing, and an open door hides little, but an open man is surely hiding something.” (-ll- (p. 730))

viii. “…hard times make people willing to accept a man who preaches change.” (Elantris (p. 106), Brandon Sanderson)

ix. “…As was often the case, the most outspoken man was the least discerning.” (-ll-, (p. 113))

x. “…it was beneficial to have a reputation for honesty, if only so that one could lie at crucial moments.” (-ll-, (pp. 114-115))

xi. “Perhaps he had been looking for that branching point in his life where he had stepped down the wrong path.

He hadn’t understood. There was rarely an obvious branching point in a person’s life. People changed slowly, over time. You didn’t take one step, then find yourself in a completely new location. You first took a little step off a path to avoid some rocks. For a while, you walked alongside the path, but then you wandered out a little way to step on softer soil. Then you stopped paying attention as you drifted farther and farther away. Finally, you found yourself in the wrong city, wondering why the signs on the roadway hadn’t led you better.” (The Emperor’s Soul (p. 71), Brandon Sanderson)

xii. “Every man had to die. He’d always found it odd that so many died when they were old, as logic said that was the point in their lives when they’d had the most practice not dying.” (Shadows of Self (pp. 79-80), Brandon Sanderson)

xiii. “The law is not something holy, son. It’s just a reflection of the ideals of those lucky enough to be in charge.” (Shadows of Self (p. 277), Brandon Sanderson)

xiv. ““There has to be a balance, Vin,” […] The balance between who we wish to be and who we need to be.” […] “But for now,” he said, nodding to the side, “we simply have to be satisfied with who we are.”” (The Hero of Ages (p. 155), Brandon Sanderson)

xv. ““A man is what he has passion about,” Breeze said. “I’ve found that if you give up what you want most for what you think you should want more, you’ll just end up miserable.” (-ll-, (p. 343))

xvi. “Beauty seen is never lost.” (John Greenleaf Whittier)

xvii. “Skills used unselfishly make for cooperation.” (Robert Holdstock)

xviii. “Every breath you take is a step towards death.” (Ali, Nahj al-Balagha, Sayings: 74)

xix. “‘Bogdan.’ Stephen finally looks up. ‘I’m not a fool. Well, no more than any of us. I miss things from time to time, people don’t quite make the sense they did.’ Bogdan nods. ‘My father, God rest him, lost himself towards the end. In those days they said he went doolally – probably that’s not what we say these days.’ ‘I don’t think we do,’ agrees Bogdan. ‘“Where’s your mother?” he would ask me sometimes.’ Stephen moves a piece on the board. A holding move, nothing risked, nothing gained. ‘Only, my mother had died, many years previously.’ Bogdan is looking down at the board now. Let Stephen talk. Only answer a question if one is asked. ‘So, you see,’ says Stephen, ‘why it might worry me that I don’t know where Elizabeth is today?’ […] ‘We all forget things, Stephen.’ Stephen smiles, and nods. ‘Very clever. But you would tell me? You would tell me if something was up? I can’t ask Elizabeth. I don’t want to worry her.’ Again, Stephen has asked Bogdan this question a number of times. And Bogdan always answers in the same way. ‘Would I tell you? Honestly, I don’t know. What would you do, if it was someone you loved?’ ‘I suppose if I felt it would help, then I would tell them,’ says Stephen. ‘And if I felt it wouldn’t help, then I wouldn’t tell them.’ Bogdan nods. ‘I like that. I think that is right.’ ‘But you think I’m all right? A bit of fuss over nothing?’ ‘That’s exactly what I think, Stephen,’ says Bogdan, and moves one of his pawns further up the board.” (…for context: Stephen is suffering from dementia. The way Osman handled these topics in this book made me cry, he understands these things better than any human should ever need to. Quote from The Bullet That Missed, Richard Osman, p. 255)

xx. “‘It’s the people, in the end, isn’t it?’ says Viktor. ‘It’s always the people. You can move halfway around the world to find your perfect life, move to Australia if you like, but it always comes down to the people you meet.’” (-ll-) (p. 407)

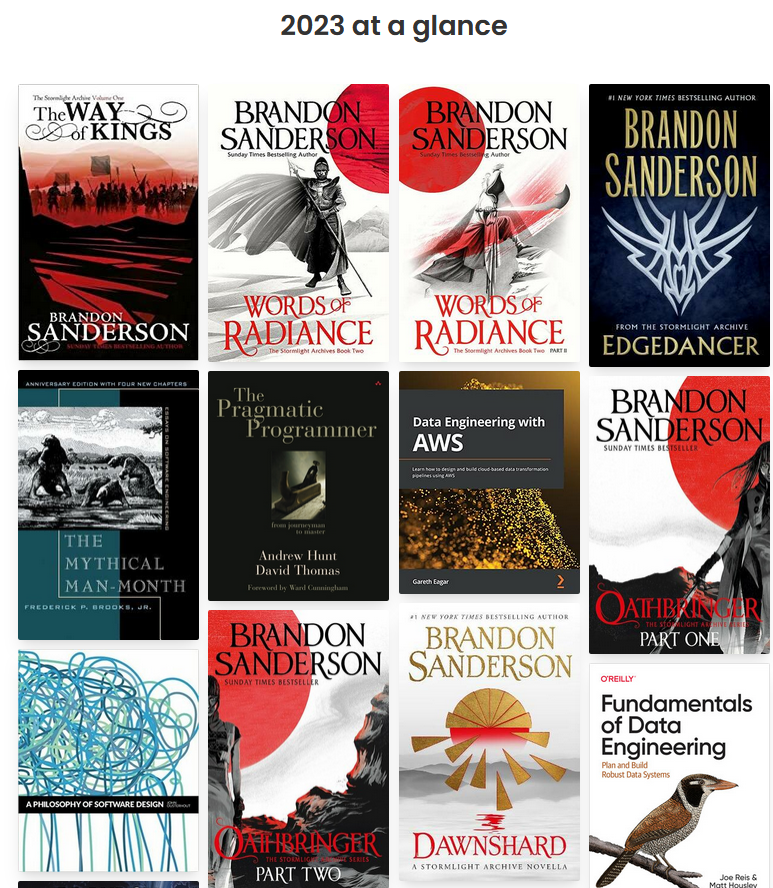

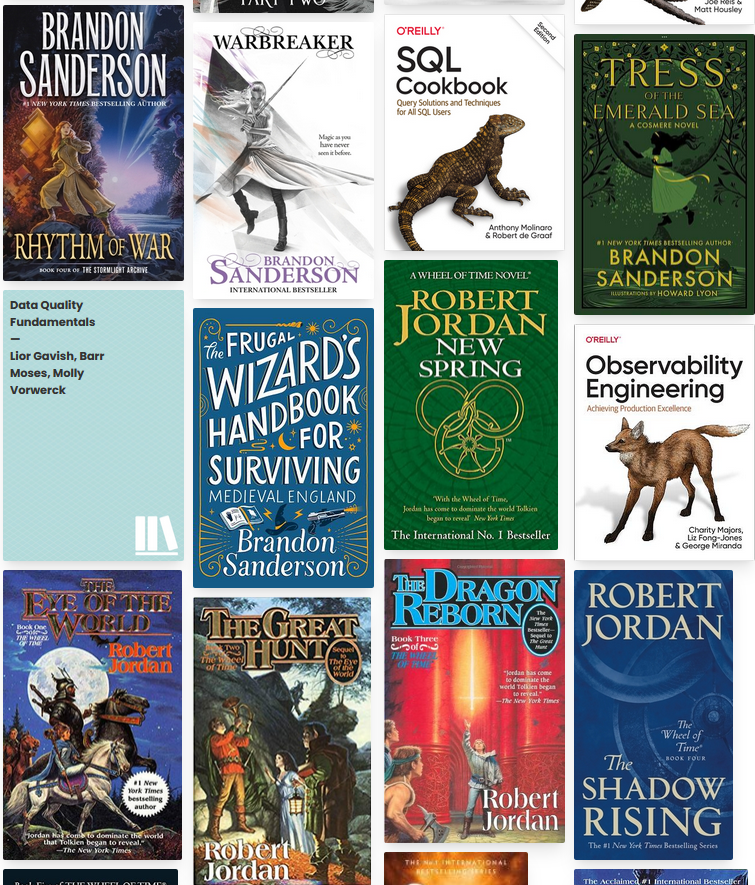

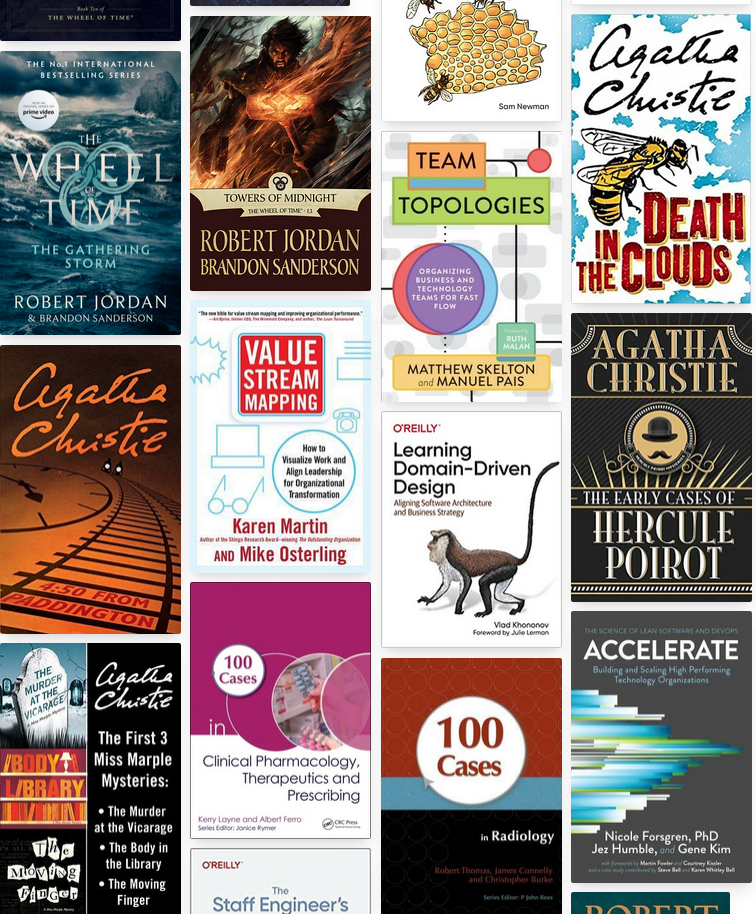

Books 2023

I try to make a post like this one every year.

Below I have included a list of the 57 books I’ve read and reviews I’ve shared throughout the year, as well as some comments and reflections.

The average page count of the books I read in 2023 was the highest ever for me – either 572 or 550 pages per book on average, depending on how you calculate it. Details are included below. So there are not many ‘easy reads’ featuring on this list. This is also the obvious reason for the low number of books featuring on the list.

I really like the visual overviews generated by book tracking resources like goodreads or TheStoryGraph (-TSG) so I decided to include screenshots of the overview auto-generated at the end of the year in this post. You can see the TSG view below. You can’t interact with the visual overview below the way you can with the overview on the site, but I’ve learned to not take for granted that these sites will necessarily be available/accessible/functional in the future and I know that the visual overview included in this post will be functional even if the source site which generated the views goes down later on, which is desirable from my perspective.

Below the visual overview I have added some more comments and details, and at the end of the post I also included the usual list in the format that has become the standard format for this blog, with author/publisher/rating information as well as various comments and links to reviews I’ve written about the books.

Here’s a link to the overview, I think it should be available to you even if you do not have a profile on TSG.

…

Okay, on to the details.

24 out of the 57 books, or 42%, were non-fiction books. Of the non-fiction books I read I would categorize 19 of them as relevant to my work to some extent, excluding from the non-fiction list only the three 100 Cases… medical textbooks, the Oxford Handbook of Pain Management, and The Fall of Rome. Some of the others turned out to be of limited relevance, but I read them because I didn’t know this at the point in time where I started reading them. I had a desire at the start of the year to read more non-fiction relevant to my work, and in that I succeeded. I take some pride in the fact that there are basically no ‘very short introductions’ included on the list; a substantial proportion of the non-fiction books I read had page counts north of 500 pages, which they would need to have for the non-fiction books not to drag down the average.

Whereas I read a little more than 100 pages per day in 2022, in 2023 I read somewhere between 85 and 90 pages per day, depending on which metric you use – TheStoryGraph (-TSG) logged 31.374 pages, which divided by 365 is ca. 86 pages/day; so although I only read roughly half as many books this year as I did last year, the number of pages I read was not really that different. Although TSG includes a lot of books, the page counts linked to the specific editions do not always match the page counts of the books you might be reading yourself. When I for the second time this year experienced that a highly technical 800+ pages textbook was logged at 500-something pages on TSG, I had had enough with their reported numbers and I started explicitly tracking on this myself, by adding comments to the book entries in the list if the page count of the version I read was substantially different from the one reported on TSG – I knew that the page count metric would be the only one I’d care about this year, so I wanted to get it right. So, if you adjust for page counts which were different in the versions I read and the versions included in TSG, then the total page count of the books I read during the year increases by 1248 pages, to 32.622 pages in total, or 89,4 pages/day.

The average page count of the books I read was 550 (31.374/57), or 572 (32.622/57), depending on which of the two metrics you prefer. The page count dropped somewhat at the end of the year; I know that I was above 600 pages for most of the year, and for example by mid-September the average page count of the books I had finished was 620 pages.

Not all pages are equal, though. There is also the question of word counts per page, which varies across books. According to the handy list on Wikipedia here, Robert Jordan’s Wheel of Time series clocks in at 4,3 million words (4.305.057) words, which at a standard format of 250-300 words/page (let’s say 275) would translate to 15.654 pages, or more than 1000 pages per book; although some of the books in the series I read had 1000+ pages, many of them did not, so they are presumably denser than what is standard for fiction reading.

To make a comparison between Jordan and something people might be familiar with, the 7 Harry Potter books has a total word count of ca. 1,1 million words (1.084.170) – so the 15 Wheel of Time books alone corresponds to roughly 28 HPbu’s (‘Harry Potter book units’). Although the Jordan books are at the extreme end of this spectrum I feel quite confident they’re not the only books in this type of category this year; Sanderson’s Stormlight Archive can be taken as another example, although it’s less pronounced as both Words of Radiance and Oathbringer were split into two books by the publisher – even so, the 6 (/8) published books in that series included in the list clocks in at 1.787.571 words according to the linked Wikipedia page, translating to 6.500 pages in standard 250-300 (275)-word-format pages; the wiki hardback word counts included in the wiki page are substantially lower than this and I’m fairly confident the versions I read were as well.

Note that the two series above alone make up 6+ million words, and account for only 23 of the 57 books I read throughout the year. I think it was a decent year in terms of the books I read, even if the ‘book count’ was far from impressive.

You can see the full list of books below.

…

1. The Way of Kings (The Stormlight Archive #1) (5, f. Brandon Sanderson).

2. Words of Radiance, Part 1 (The Stormlight Archive #2a) (5, f. Brandon Sanderson). Review here.

3. Words of Radiance, Part 2 (The Stormlight Archive #2b) (5, f. Brandon Sanderson). Short review here (…you’ll have to trust me on the fact that the puns I included in the review make a lot more sense after you’ve read the book…).

4. The Mythical Man-Month: Essays on Software Engineering, Anniversary Edition (3.0, nf. Frederick P. Brooks Jr., Addison-Wesley Professional). Work-related.

5. Edgedancer (The Stormlight Archive #2.5) (5, f. Brandon Sanderson). Review here.

6. The Pragmatic Programmer: From Journeyman to Master (3, nf. Hunt & Thomas, Pearson Education, Inc.). Work-related. Blog post here.

7. Data Engineering with AWS (2.5, nf. Gareth Eagar, Packt Publishing). Work-related. Short review here. Note that the page count given in TheStoryGraph link does not match my version of the book; my version has 662 pages, rather than the 482 pages indicated in the linked book profile page).

8. Oathbringer Part One (The Stormlight Archive #3a) (4.5, f. Brandon Sanderson).

9. A Philosophy of Software Design (4.5, nf. John Ousterhout, Yaknyam Press). Work-related, I shared many insights from this book with other members of my team, it’s full of good stuff. As I put it in my very short review, “An excellent little book, my main criticism would be that it’s too short.”

10. Oathbringer: Part Two (The Stormlight Archive #3b) (4.75, f. Brandon Sanderson).

11. Dawnshard (The Stormlight Archive #3.5) (4.25, f. Brandon Sanderson). Short review here.

12. Fundamentals of Data Engineering: Plan and Build Robust Data Systems (5, nf. Housley & Reis, O’Reilly Media). Work-related, an excellent book.

13. Rhythm of War (The Stormlight Archive #4). (5, f. Brandon Sanderson). Short review here. Like some of the other books in the series, this book is just ridiculously long – but that should not stop you from reading it.

14. Warbreaker (3.75, f. Brandon Sanderson).

15. SQL Cookbook: Query Solutions and Techniques for All SQL Users (2.5, nf. de Graaf & Molinaro, O’Reilly Media). Work-related. Long-ish review here. Note that the page count in TheStoryGraph does not match my version of the book; my version has 814 pages, rather than the 572 pages indicated in the linked book profile page. As I also mention in my review, this book did not need to include 814 pages in order to cover the material it does; this is one of the reasons why the rating is not higher than it is.

16. Tress of the Emerald Sea (Secret Projects #1) (4.0, f. Brandon Sanderson). Very short review here.

17. Data Quality Fundamentals (3.75, nf. Gavish, Moses & Vorwerck, O’Reilly Media). Work-related.

18. The Frugal Wizard’s Handbook for Surviving Medieval England (Secret Projects #2) (3.25, f. Brandon Sanderson).

19. New Spring (The Wheel of Time #0) (4.5, f. Robert Jordan). Short review here.

20. Observability Engineering (nf. Majors, Fong-Jones & Miranda, O’Reilly Media). Review here.

21. The Eye of the World (The Wheel of Time #1) (4.5, f. Robert Jordan). Very short review here.

22. The Great Hunt (The Wheel of Time #2) (5, f. Robert Jordan).

23. The Dragon Reborn (The Wheel of Time #3) (4.5, f. Robert Jordan).

24. The Shadow Rising (The Wheel of Time #4) (5.0, f., Robert Jordan). Short review here.

25. The Fires of Heaven (The Wheel of Time #5) (4.25, f. Robert Jordan).

26. Data Engineering with Python (2.25, nf. Paul Crickard, Packt Publishing). A somewhat disappointing read. The page count reported on TheStoryGraph was off by ca. 100 pages; my version had 464 pages, rather than the 356 pages logged on TSG. Despite the fact that the book was not all that great it did lead to a conversation with a colleague about Apache Airflow which eventually lead to us starting to use Airflow.

27. Lord of Chaos (The Wheel of Time #6) (4.5, f. Robert Jordan)

28. A Crown of Swords (The Wheel of Time #7) (4.25, f. Robert Jordan)

29. The Path of Daggers (The Wheel of Time #8) (4.25, f. Robert Jordan)

30. Das Boot (5.0, f. Lothar-Günther Buchheim). There was no way to justify not giving this book 5 stars, but as I pointed out in the review I wrote on Thestorygraph, “This book is definitely not for everybody”.

31. Data Mesh: Delivering Data-Driven Value at Scale (5, nf. Zhamak Dehghani, O’Reilly Media). Work-related, very much worth reading; I recommended this book to several of my work colleagues.

32. Winter’s Heart (The Wheel of Time #9) (4.25, f. Robert Jordan)

33. Crossroads of Twilight (The Wheel of Time #10) (4.5, f. Robert Jordan)

34. Knife of Dreams (The Wheel of Time #11) (4.5, f. Robert Jordan)

35. Kafka: The Definitive Guide: Real-Time Data and Stream Processing at Scale (nf. Shapira, Palino & Narkhede, O’Reilly Media). The page count reported on TheStoryGraph was off by more than 300 pages – their 2nd edition was logged at 488 pages, whereas the version I read had 817 pages. This book takes a significant amount of work to read cover to cover. My org.’s at the time of writing in the process of implementing Kafka (this was why I read the book), I felt it was premature to rate the book for this reason.

36. Building Event-Driven Microservices: Leveraging Organizational Data at Scale (3.0, nf. Adam Bellemare, O’Reilly Media). TheStoryGraph’s version of the book underestimated the book’s page count by ca. 60 pages; their version was logged at 324 page whereas the version I read contained 385 pages.

37. The Gathering Storm (The Wheel of Time #12) (4.75, f. Robert Jordan)

38. Towers of Midnight (The Wheel of Time #13) (4.5, f. Robert Jordan)

39. Building Microservices: Designing Fine-Grained Systems (4.5, nf. Sam Newman, O’Reilly Media). Review here.

40. Death in the Clouds (3.0, Agatha Christie, Poirot #12). Short review here.

41. 4:50 from Paddington (Agatha Christie, Miss Marple #8). Short review here.

42. Value Stream Mapping (2.0, nf. Osterling & Martin, McGraw Hill). Very short review here.

43. Team Topologies: Organizing Business and Technology Teams for Fast Flow (4.75, nf. Pais & Skelton, IT Revolution Press). I decided after having read this book to set up knowledge sharing sessions covering material from the book with both my team colleagues and with a senior director. I got positive feedback from both parties. From my review of the book: “This is an important book you’ll probably want to read.”

44. The Early Cases of Hercule Poirot (2.0, f. Agatha Christie)

45. Miss Marple 3-Book Collection 1: The Murder at the Vicarage, The Body in the Library, The Moving Finger (2,5, f. Review here). The page count reported on TheStoryGraph was off by more than 100 pages – on TSG this one was set to 667 pages, but my Kindle version of the book(s) had 804 pages.

46. 100 Cases in Clinical Pharmacology, Therapeutics and Prescribing: 100 Cases (3.5, nf. Layne & Ferro, CRC Press). Very short review here.

47. Learning Domain-Driven Design: Aligning Software Architecture and Business Strategy (3, nf. Vladik Khononov, O’Reilly Media. A slightly disappointing read, short review here). The page count reported on TheStoryGraph was off by almost 200 pages; on TSG the page count was set at 340 pages, but my Kindle version included 531 pages.

48. Accelerate: Building and Scaling High Performing Technology Organizations (2, nf. Humble, Kim & Forsgren, IT Revolution Press). Short review here.

49. 100 Cases in Dermatology (2.75, nf. Morris-Jones, Powell & Benton, CRC Press). Review here.

50. A Memory of Light (The Wheel of Time #14) (5, f. Robert Jordan). Review here.

51. The Staff Engineer’s Path: A Guide For Individual Contributors Navigating Growth and Change (2.5, nf. Tanya Reilly, O’Reilly Media).

52. 100 Cases in Radiology (3.5, nf. Thomas, Burke & Connelly, CRC Press).

53. The Last Devil to Die – A Thursday Murder Club Mystery #4 (5, f. Richard Osman). Short review here.

54. Oxford Handbook of Pain Management (3.25, nf. Connell, Brook & Pickering (editors), Oxford University Press).

55. The Enterprise Data Catalog (4.5, nf. Olesen-Bagneux, Ole, O’Reilly Media).

56. Yumi and the Nightmare Painter (Secret Projects #3) (4.5, f. Brandon Sanderson). Review here.

57. The Fall of Rome: And the End of Civilization (5, nf. Bryan Ward-Perkins, Oxford University Press). Review here.

The Pragmatic Programmer

I recently read this book by David Thomas & Andrew Hunt. It’s quite decent.

Some sample quotes from the book below:

…

“Programming is a craft. At its simplest, it comes down to getting a computer to do what you want it to do (or what your user wants it to do). As a programmer, you are part listener, part advisor, part interpreter, and part dictator. You try to capture elusive requirements and find a way of expressing them so that a mere machine can do them justice. You try to document your work so that others can understand it, and you try to engineer your work so that others can build on it. What’s more, you try to do all this against the relentless ticking of the project clock. You work small miracles every day.

It’s a difficult job.

There are many people offering you help. Tool vendors tout the miracles their products perform. Methodology gurus promise that their techniques guarantee results. Everyone claims that their programming language is the best, and every operating system is the answer to all conceivable ills.

Of course, none of this is true. There are no easy answers. There is no best solution, be it a tool, a language, or an operating system. There can only be systems that are more appropriate in a particular set of circumstances.

This is where pragmatism comes in. You shouldn’t be wedded to any particular technology, but have a broad enough background and experience base to allow you to choose good solutions in particular situations. […] You adjust your approach to suit the current circumstances and environment. You judge the relative importance of all the factors affecting a project and use your experience to produce appropriate solutions.”

“One of the cornerstones of the pragmatic philosophy is the idea of taking responsibility for yourself and your actions in terms of your career advancement, your learning and education, your project, and your day-to-day work. Pragmatic Programmers take charge of their own career, and aren’t afraid to admit ignorance or error. It’s not the most pleasant aspect of programming, to be sure, but it will happen — even on the best of projects. Despite thorough testing, good documentation, and solid automation, things go wrong. Deliveries are late. Unforeseen technical problems come up. These things happen, and we try to deal with them as professionally as we can. This means being honest and direct. We can be proud of our abilities, but we must own up to our shortcomings — our ignorance and our mistakes.”

“Above all, your team needs to be able to trust and rely on you — and you need to be comfortable relying on each of them as well. Trust in a team is absolutely essential for creativity and collaboration according to the research literature.[4] In a healthy environment based in trust, you can safely speak your mind, present your ideas, and rely on your team members who can in turn rely on you. Without trust, well…”

“In addition to doing your own personal best, you must analyze the situation for risks that are beyond your control. You have the right not to take on a responsibility for an impossible situation, or one in which the risks are too great […] When you do accept the responsibility for an outcome, you should expect to be held accountable for it. When you make a mistake (as we all do) or an error in judgment, admit it honestly and try to offer options. Don’t blame someone or something else, or make up an excuse. […] Instead of excuses, provide options. Don’t say it can’t be done; explain what can be done to salvage the situation.”

“The phrase “good enough’’ does not imply sloppy or poorly produced code. All systems must meet their users’ requirements to be successful […]. We are simply advocating that users be given an opportunity to participate in the process of deciding when what you’ve produced is good enough for their needs. […] The scope and quality of the system you produce should be discussed as part of that system’s requirements. […] Often you’ll be in situations where trade-offs are involved. Surprisingly, many users would rather use software with some rough edges today than wait a year for the shiny, bells-and-whistles version […] Great software today is often preferable to the fantasy of perfect software tomorrow. If you give your users something to play with early, their feedback will often lead you to a better eventual solution”.

“A good idea is an orphan without effective communication.”

“Build Documentation In, Don’t Bolt It On”.

“DRY Is More Than Code

Let’s get something out of the way up-front. In the first edition of this book we did a poor job of explaining just what we meant by Don’t Repeat Yourself [DRY]. Many people took it to refer to code only: they thought that DRY means “don’t copy-and-paste lines of source.” That is part of DRY, but it’s a tiny and fairly trivial part. DRY is about the duplication of knowledge, of intent. It’s about expressing the same thing in two different places, possibly in two totally different ways.

Here’s the acid test: when some single facet of the code has to change, do you find yourself making that change in multiple places, and in multiple different formats? Do you have to change code and documentation, or a database schema and a structure that holds it, or…? If so, your code isn’t DRY.”

“When we perform maintenance, we have to find and change the representations of things — those capsules of knowledge embedded in the application. The problem is that it’s easy to duplicate knowledge in the specifications, processes, and programs that we develop, and when we do so, we invite a maintenance nightmare […] Perhaps the hardest type of duplication to detect and handle occurs between different developers on a project. Entire sets of functionality may be inadvertently duplicated, and that duplication could go undetected for years, leading to maintenance problems.”

“At a high level, deal with the problem by building a strong, tight-knit team with good communications.

However, at the module level, the problem is more insidious. Commonly needed functionality or data that doesn’t fall into an obvious area of responsibility can get implemented many times over.

We feel that the best way to deal with this is to encourage active and frequent communication between developers.”

“What you’re trying to do is foster an environment where it’s easier to find and reuse existing stuff than to write it yourself. If it isn’t easy, people won’t do it. And if you fail to reuse, you risk duplicating knowledge.”

“Prototypes are designed to answer just a few questions, so they are much cheaper and faster to develop than applications that go into production. The code can ignore unimportant details—unimportant to you at the moment, but probably very important to the user later on. If you are prototyping a UI, for instance, you can get away with incorrect results or data.”

“What sorts of things might you choose to investigate with a prototype? Anything that carries risk. Anything that hasn’t been tried before, or that is absolutely critical to the final system. Anything unproven, experimental, or doubtful. Anything you aren’t comfortable with. You can prototype:

*Architecture

*New functionality in an existing system

*Structure or contents of external data

*Third-party tools or components

*Performance issues

*User interface design

Prototyping is a learning experience. Its value lies not in the code produced, but in the lessons learned. That’s really the point of prototyping.”

“One of the interesting things about estimating is that the units you use make a difference in the interpretation of the result. If you say that something will take about 130 working days, then people will be expecting it to come in pretty close. However, if you say “Oh, about six months,” then they know to look for it any time between five and seven months from now. […] The same concepts apply to estimates of any quantity: choose the units of your answer to reflect the accuracy you intend to convey.”

“Every time you find yourself doing something repetitive, get into the habit of thinking “there must be a better way.” Then find it.”

“All software becomes legacy software as soon as it’s written.”

“All errors give you information. You could convince yourself that the error can’t happen, and choose to ignore it. Instead, Pragmatic Programmers tell themselves that if there is an error, something very, very bad has happened. Don’t forget to Read the Damn Error Message”

“One of the benefits of detecting problems as soon as you can is that you can crash earlier, and crashing is often the best thing you can do. The alternative may be to continue, writing corrupted data to some vital database or commanding the washing machine into its twentieth consecutive spin cycle.”

“…the basic principle stays the same — when your code discovers that something that was supposed to be impossible just happened, your program is no longer viable. Anything it does from this point forward becomes suspect, so terminate it as soon as possible. A dead program normally does a lot less damage than a crippled one.”

“How to Program by Coincidence

Suppose Fred is given a programming assignment. Fred types in some code, tries it, and it seems to work. Fred types in some more code, tries it, and it still seems to work. After several weeks of coding this way, the program suddenly stops working, and after hours of trying to fix it, he still doesn’t know why. Fred may well spend a significant amount of time chasing this piece of code around without ever being able to fix it. No matter what he does, it just doesn’t ever seem to work right.

Fred doesn’t know why the code is failing because he didn’t know why it worked in the first place. It seemed to work, given the limited “testing’’ that Fred did, but that was just a coincidence.”

“Dont Program by Coincidence […] If you’re not sure why it works, you won’t know why it fails. […] Developers who don’t actively think about their code are programming by coincidence — the code might work, but there’s no particular reason why. […] Coding is not mechanical. … There are decisions to be made every minute — decisions that require careful thought and judgment if the resulting program is to enjoy a long, accurate, and productive life.”

Recreational math, a few videos

You can check out Neller & Presser’s 2004 paper which Ben refers to in the video here.

I seem to recall the box game above from when I was a child, and I think I actually tentatively reached a similar conclusion as Bradlow did in the video, namely that one of the example combinations displayed on the box did not seem to be possible to reach through any legal sequence of box manipulations/moves. One of my uncles used to be a math professor and I think we actually discussed this game at one point, but if we did it’s many years ago so details are very hazy – Bradlow’s line of reasoning sounds familiar but that may just be due to the fact that a ‘parity argument’ of the type he introduces in the video seems to often be useful in the context of implicit game theory existence proofs like these, even though we did not in fact go into such details back then.

Either way Bradlow did the math and if you encounter someone who claims he managed to solve the example discussed in the video you’ll know two things: 1. S/he cheated, and 2. s/he most likely did not do the math.

The cat and mouse game is a great illustration/example of how combining different strategies may sometimes lead to the solution of a problem that might not be solveable by any single strategy in the choice set on its own; considering combinations of different strategies will always increase the choice set, and/but sometimes it’ll do so in surprisingly unintuitive yet helpful ways.

Books 2022

I try to make a list like this every year.

Goodreads generated a view of some of the books with cover images here. I only used Goodreads for tracking my reading for part of the year, as the site stopped working for me – I became unable to add new books because of a bug, and I learned that support for such issues is non-existent; you get what you pay for, and they provided me no option to actually pay for getting this fixed so I left the site. I have switched to The StoryGraph for keeping track of the books I read, and they handle stats like these somewhat differently and they don’t generate the same kind of list. I think you also need an account on the site in order to see stats like these, I’m asked for a login when I try to access a link to my stats page for 2022 in incognito mode, so I didn’t think it made sense to share that link; however I have shared a relevant screen shot from the stats page below. The Storygraph’s rating scale is more fine-grained than that of Goodreads, but the scale is roughly similar.

The primary variable that came into focus this year for me was the page count, not the book count, because I realized in October that this might be the year where I actually had a realistic chance to manage to read 100 book pages per day on average over the course of the year, something I have never managed to do before. This was also the reason for the high number of pages read in November in particular (below). I made it with a few days to spare. The stats page on Goodreads logs the page count for the year at 13.749 pages, which is incorrect because three books had missing page counts from the book profile pages and so their page counts are not included in the total. Wolverton’s Nuclear Weapons book did not have a page count on Goodreads but my Kindle version has 268 pages, which should be added to the total, as should the 240 missing pages from The Ritual Animal: Imitation and Cohesion in the Evolution of Social Complexity and the 150 missing pages from the publication Cybersecurity. For The Storygraph I have 23.404 pages logged, distributed in this manner throughout the year:

That number is however too high, as it includes 4 books I do not want to include in the count because they are Dilbert books; those 4 publications make up in total 704 pages which should be subtracted as well. There is zero overlap between the two sites – I was recorded as being in the middle of reading multiple books on Goodreads when the site stopped working, but this is irrelevant for the stats as I know that logged pages linked to books not finished are not included in the Goodreads total (they are however included in the Storygraph stats); this is something I have been annoyed about in the past. So altogether this makes the total page count equal to 13.749 + 268 + 240 + 150 + 23.404 – 704 = 37.107 pages, which is slightly more than the 36.500 cut-off which would be required in order to have reached the goal of 100 pages per day.

Until October, when I was realizing the 100-pages-per-day goal might be achievable, I don’t think I actually cared all that much about trying to find the exact edition of the specific book I was reading in contexts where many editions exist, and so in some contexts I’d assume specific page counts of the books I actually read might have been different from the page counts logged; I am aware of at least one major mismatch: Wiegers & Beatty’s Software Requirements 3 was logged as 672 pages on The Storygraph, however my own Kindle version of the book actually has 1248 pages. However I’m assuming such measurement errors should average out, so I have not added those specific ‘missing pages’ because they might be offset by other errors in the other direction elsewhere which I have not taken note of.

As for the number of books completed, the number for the year is 35 (Goodreads) + 78 (The Storygraph) – 4 (Dilbert) = 109. No unfinished books are included in that number, although I also read the first few hundred pages of the book Data Engineering with AWS during the year (…pages which, again, were not included in the total because Goodreads disregards pages linked to unfinished books), but I did not manage to finish that one due to the fact that work was taking up too much of the ‘mental surplus’ required to actually read and understand a book like that at the time, and I just never got back to it – I might do that this year. I also started reading Sanderson’s The Way of Kings at the end of the year and that book is also not featuring on the list despite contributing a few pages to the total page count.

Most of the books I read last year were fiction books, but some of the non-fiction books I read throughout the year are not easy reads and I’m reasonably satisfied with the amount of non-fiction reading I did throughout the year, although I didn’t read ‘enough’ of it towards the end of the year in particular, because (good) non-fiction takes longer to read than fiction and I sort of really wanted to hit that page count goal. In terms of the categories I have used, 20 books were non-fiction, 6 were miscellaneous (these tend to be categorized as non-fiction elsewhere, but I reserve that category for textbooks and similar – if they were to be coded as non-fiction as well then I completed one non-fiction book every second week on average over the course of the year), and 83 fiction books.

The average page count of the books I read throughout the year was ~340 pages (37.107/109), which is rather high and one of the reasons why I wasn’t really all that focused on a book count endgoal this year.

…

In the list below the numbers in the parentheses are the ratings I gave the books, and the letters following the ratings code for the book category (f = fiction, nf = non-fiction, m = miscellaneous).

Below is the list of books I read in 2022:

1 All Clear (5, f). Connie Willis. Short Goodreads review here (quote from the review: “This is one of the best books I’ve ever read”).

2. Fire Watch (2, f). Connie Willis.

3. Lincoln’s Dreams (3, f). Connie Willis. Goodreads review here.

4. Microbiology, A Very Short Introduction (4, nf. Oxford University Press)

5. The Name of the Wind (5, f). Patrick Rothfuss. My very short Goodreads review simply states: “This is an amazing book.” Recommended.

6. The Wise Man’s Fear (5, f). Patrick Rothfuss.

7. The Slow Regard of Silent Things (5, f). Patrick Rothfuss. Goodreads review here.

8. Furies of Calderon (4, f). Jim Butcher.

9. Adaptation and Natural Selection (3, nf. Princeton University Press).

10. Academ’s Fury (3, f). Jim Butcher.

11. Cursor’s Fury (3, f). Jim Butcher.

12. Captain’s Fury (3, f). Jim Butcher.

13. Princeps’ Fury (2, f). Jim Butcher.

14. The Missing Readme: A Guide for the New Software Engineer (4, nf. No Starch Press). Work-related.

15. The Great Troll War (3, f). Jasper Fforde. Short Goodreads review here.

16. First Lord’s Fury (2, f). Jim Butcher.

17. Introduction to PLCs (2, nf. Carolina Academic Press). Short Goodreads review here.

18. The Metamorphosis (1, f). Franz Kafka. My very short Goodreads review simply stated: “Boring and implausible.”

19. The Thursday Murder Club (4, f). Richard Osman.

20. The Man Who Died Twice (5, f). Richard Osman.

21. 97 Things Every Data Engineer Should Know (2, nf. O’Reilly Media). Work-related. Goodreads review here.

22. Magpie Murders (4, f). Anthony Horowitz.

23. Moonflower Murders (3, f). Anthony Horowitz.

24. The Word Is Murder (5, f). Anthony Horowitz. Short Goodreads review here.

25. The Sentence is Death (4, f). Anthony Horowitz. Very short goodreads review here.

26. A Line To Kill (4, f). Anthony Horowitz. Very short goodreads review here.

27. The House of Silk (2, f). Anthony Horowitz.

28. Moriarty (4, f). Anthony Horowitz.

29. Nuclear Weapons (4, nf. Princeton University Press). Very short Goodreads review here.

30. The Goblin Emperor (5, f). Very short Goodreads review here.

31. The Ritual Animal: Imitation and Cohesion in the Evolution of Social Complexity (2, nf. Oxford University Press). A mixed bag, as I wrote in my Goodreads review here.

32. Supernova (4, nf. Princeton University Press). In my Goodreads review I called the book ‘A nice little primer on this topic’.

33. The Witness for the Dead (3, f). Goodreads review here.

34. Cybersecurity (2, nf. Princeton University Press). Not really coverage at a level deep enough for this to be particularly useful in my position, but I did grab it in part for work-related reasons (wanting to know if this might be an easy primer it would make sense to recommend to some colleagues at work).

35. The Angel of the Crows (2, f). Katherine Addison. Short Goodreads review here.

36. The Grief of Stones (2,25, f). Katherine Addison.

37. What Patients Say, What Doctors Hear (1,5, nf. Beacon Press)

38. The Man With a Load of Mischief (2, f). Martha Grimes.

39. Death Of An Old Goat (4,5, f). Robert Barnard. Short review here.

40. A Little Local Murder (4,25, f). Robert Barnard.

41. Death on the High C’s (2,25, f). Robert Barnard. Short review here.

42. The History of Time: A Very Short Introduction (1,25, nf. Oxford University Press). Short review here.

43. Blood Brotherhood (2, f). Robert Barnard.

44. Unruly Son (4, f). Robert Barnard.

45. Posthumous Papers (2,25, f). Robert Barnard.

46. Death In A Cold Climate (3,5, f). Robert Barnard. Review here.

47. Mother’s Boys (4,25, f). Robert Barnard. Very short review here.

48. Little Victims (4, f). Robert Barnard.

49. Out of the Blackout (2, f). Robert Barnard.

50. Corpse In A Gilded Cage (4,5, f). Robert Barnard. Very short review here.

51. The Disposal of the Living (2, f). Robert Barnard. Short review here.

52. Political Suicide (4,25, f). Robert Barnard.

53. The Skeleton in the Grass (3,5, f). Robert Barnard.

54. At Death’s Door (f). Robert Barnard.

55. A City Of Strangers (0,0, f). Robert Barnard. Review here.

56. Designing Data-Intensive Applications (5, nf. O’Reilly Media). I would probably have added this book to my list of favorite books on Goodreads if I had still been using Goodreads at that point in time. This book is loong (1052 pages), but it’s full of good stuff. I have been sharing information from this book with colleagues in my team. The book is a big part of the reason why the 10+ books above it on this list were all fiction books – it wasn’t that I wasn’t reading any non-fiction during that period, but rather that this book takes a lot of time to read and understand.

57. The Masters of the House (3, f). Robert Barnard.

58. Death and the Chaste Apprentice (2,25, f). Robert Barnard.

59. A Fatal Attachment (2, f). Robert Barnard.

60. A Hovering Of Vultures (1,5, f). Robert Barnard.

61. Unholy Dying (2, f). Robert Barnard.

62. Mort (5, f). Terry Pratchett.

63. The Law (4, f). Jim Butcher.

64. Touched by the Dead (1,75, f). Robert Barnard.

65. With the Old Breed: At Peleliu and Okinawa (5, nf. Oxford University Press). Eugene Sledge’s book is very much worth reading if you’re interested in WW2 war memoirs.

66. Getting to Yes: Negotiating Agreement Without Giving In (3,5, nf. Penguin Group). Review here.

67. Software Requirements 3 (5, nf. Microsoft Press). Review here. Work-related. I would have added this book to my list of favorite books on Goodreads if I had still been using Goodreads. This was the only book I blogged last year, blogpost here.

68. What If? 2: Additional Serious Scientific Answers to Absurd Hypothetical Questions (5, m.). Very short review here.

69. A Confederacy of Dunces (2,25, f). John Kennedy Toole. Review here.

70. Troy (3, m.). Stephen Fry. Review here.

71. How to: Absurd Scientific Advice for Common Real-World Problems (4, m.). Randall Munroe.

72. The Bullet That Missed (4,5, f). Richard Osman.

73. Still Life (3,5, f). Louise Penny.

74. Biofabrication (2,75, nf. MIT Press).

75. A Fatal Grace (2,25, f). Louise Penny.

76. The Cruelest Month (1,25, f). Louise Penny.

77. Hunting: A Cultural History (1, nf. MIT Press). Review here.

78. How to Take Over the World: Practical Schemes and Scientific Solutions for the Aspiring Supervillain (3,5, m.). This book is quite funny and I recommended it to a colleague who happens to be a Marvel fan.

79. Humble Pi: A Comedy of Maths Errors (5, m.). Matt Parker. Very short review here.

80. Neurolinguistics (4,5, nf. MIT Press). I think I recommended this one to one of my brothers and one or two colleagues, it’s a very nice little book.

81. Death and Croissants (4,5, f). Ian Moore.

82. Death and Fromage (4,5, f). Ian Moore. Very short review here.

83. Death and Papa Noël (3, f). Ian Moore.

84. Forever and a Day (2, f). Anthony Horowitz. Short review here.

85. With a Mind to Kill (2, f). Anthony Horowitz. Short review here.

86. Trigger Mortis (2, f). Anthony Horowitz.

87. Casino Royale (2, f). Ian Fleming.

88. Live and Let Die (2, f). Ian Fleming.

89. Moonraker (2, f). Ian Fleming.

90. Diamonds Are Forever (2,25, f). Ian Fleming.

91. From Russia With Love (2, f). Ian Fleming.

92. Dr No (2,5, f). Ian Fleming.

93. Rethinking Human Evolution (3, nf. MIT Press). Review here.

94. Zero Minus Ten (1,75, f). Raymond Benson.

95. The Facts of Death (2, f). Raymond Benson. Very short review here.

96. The Final Empire (5, f). Brandon Sanderson. Very short review here.

97. The Well of Ascension (5, f). Brandon Sanderson. Review here.

98. Code That Fits in Your Head: Heuristics for Software Engineering (3, nf, Addison-Wesley Professional). Work-related, I shared insights from this book with some of my colleagues.

99. The Hero of Ages (4, f). Brandon Sanderson.

100. The Alloy of Law (5, f). Brandon Sanderson. Very short review here.

101. Shadows of Self: A Mistborn Novel (5, f). Brandon Sanderson. Very short review here.

102. The Bands of Mourning (5, f). Brandon Sanderson.

103. Mistborn: Secret History (2,25, f). Brandon Sanderson. Short review here.

104. The Lost Metal (4,5, f). Brandon Sanderson.

105. Elantris (5, f). Brandon Sanderson. Short review here.

106. The Emperor’s Soul (4, f). Brandon Sanderson. Review here.

107. High Time to Kill (1, f). Raymond Benson.

108. Intellectual Property Strategy (1,5, nf. MIT Press). Review here.

109. The Journal of Nicholas Cresswell, 1774-1777 (m.). Review here.

Software requirements

I’m currently reading this book. Some learnings and quotes from the first third of it below (I’m slightly confused if the linked version is exactly the same version as the one I’m reading, as the Kindle version I’m reading includes 1252 pages and the page count of the above book is approximately half of that, but that may just be a Kindle thing). I really like the book, it includes a lot of good stuff, though the amount of coverage dedicated to implementing good requirements practices in an agile setting leaves a little to be desired, at least so far.

…

“Many problems in the software world arise from shortcomings in the ways that people learn about, document, agree upon and modify the product’s requirements. […] common problem areas are informal information gathering, implied functionality, miscommunicated assumptions, poorly specified requirements, and a casual change process.

Various studies suggest that errors introduced during requirements activities account for 40 to 50 percent of all defects found in a software product […]. Inadequate user input and shortcomings in specifying and managing customer requirements are major contributors to unsuccessful projects. Despite this evidence, many organizations still practice ineffective requirements methods. Nowhere more than in the requirements do the interests of all the stakeholders in a project intersect. […] stakeholders include customers, users, business analysts, developers, and many others. Handled well, this intersection can lead to delighted customers and fulfilled developers. Handled poorly, it’s the source of misunderstanding and friction that undermine the product’s quality and business value.”

“The goal of requirements development is to accumulate a set of requirements that are good enough to allow your team to proceed with design and construction of the next portion of the product at an acceptable level of risk. You need to devote enough attention to requirements to minimize the risks of rework, unacceptable products, and blown schedules.”

“You will never get perfect requirements. […] The amount of detail, the kinds of information you provide, and the way you organize it should all be intended to meet the needs of your audiences. […] On most projects it’s neither realistic nor necessary to pin down every requirement detail early in the project. Instead, think in terms of layers. You need to learn just enough about the requirements to be able to roughly prioritize them and allocate them to forthcoming releases or iterations. Then you can detail groups of requirements in a just-in-time fashion to give developers enough information so they can avoid excessive and unnecessary rework.”

“Reaching agreement on external and internal system interfaces has been identified as a software industry best practice […]. A complex system with multiple subcomponents should create a separate interface specification or system architecture specification. […] A change in an interface demands communication with the person, group, or system on the other side of that interface.”

There are many types of requirements:

“Software requirements include three distinct levels: business requirements, user requirements, and functional requirements. In addition, every system has an assortment of nonfunctional requirements. […] What you call each deliverable is less important than having your organization agree on their names, what kinds of information go into each, and how that information is organized.”

Business requirements describe why the organization is implementing the system — the business benefits the organization hopes to achieve.

User requirements describe goals or tasks the users must be able to perform with the product that will provide value to someone. […] [they] describe what the user will be able to do with the system. An example of a use case is “Check in for a flight” using an airline’s website.

Functional requirements specify the behaviors the product will exhibit under specific conditions. They describe what the developers must implement to enable users to accomplish their tasks (user requirements), thereby satisfying the business requirements. [They] often are written in the form of the traditional “shall” statements: “The Passenger shall be able to print boarding passes for all flight segments for which he has checked in”.

System requirements describe the requirements for a product that is composed of multiple components or subsystems.

A good example of a “system” is the cashier’s workstation in a supermarket. There’s a bar code scanner integrated with a scale, as well as a hand-held bar code scanner. The cashier has a keyboard, a display, and a cash drawer. […] These hardware devices are all interacting under software control. The requirements for the system or product as a whole, then, lead the business analyst to derive specific functionality that must be allocated to one or another of those component subsystems, as well as demanding an understanding of the interfaces between them.

Business rules include corporate policies, government regulations, industry standards, and computational algorithms.

[They] are not themselves software requirements because they have an existence beyond the boundaries of any specific software application. However, they often dictate that the system must contain functionality to comply with the pertinent rules.

Non-functional requirements might specify not what the system does, but how well it does it.

Some people consider nonfunctional requirements to be synonymous with quality attributes, but design and implementation constraints are also considered nonfunctional requirements, as are external interface requirements.

Quality requirements should be specific, quantitative, and verifiable. It is recommended that the relative priorities of various such attributes, such as ease of use vs. ease of learning, or security vs. performance, is specified by the people who request the attributes in question.

You can deliver a product that has all the desired functionality but that users hate because it doesn’t match their (often unstated) quality expectations.

A feature consists of one or more logically related system capabilities that provide value to a user and are described by a set of functional requirements.

…

“The best way to tell whether your requirements possess these desired attributes is to have several stakeholders review them. Different stakeholders will spot different kinds of problems.” (p. 378)

…

Project requirements are different from product requirements. In this context, the above requirement components were product requirements. Project requirements include components like:

*Physical resources used by the development team (e.g. hardware), rooms, etc.

*Staff training needs

*User documentation, including training materials

*Support documentation

*Requirements and procedures for releasing the product, installing it in the operating environment, configuring it, and testing the installation. (Implementation plan)

*SLAs

*Intellectual property-related requirements

…

A template for stuff you may want to address:

Code Complete (I)

Here’s what I wrote about the book elsewhere not long ago: “I recently started reading the book Code Complete by Steve McConnell. I’ve only read the first 100 pages so far (Kindle estimate of time remaining: 27 hours… – then again, it is 960 pages..) but I can already confidently say at this point that if you’re a software developer or programmer or similar, or plan to be, then you’ll want to read this book – it’s awesome. (…and even just reading the first 50 pages of this book would probably make you a better programmer, even if you read no more than that…)”

I enjoy reading it and I learn something new on many of the pages here, or perhaps get a new angle on a topic I have familiarity with – I’ve already come across multiple important insights that I’d really wish I’d have known about when involved in projects in the past, and I’m trying to share some of these learnings also with my coworkers. It’s just a good book. The fact that it’s already almost 20 years old of course means that there isn’t a great deal of coverage about, say, the topics touched upon in the lecture below this post, but a lot of this stuff is about fundamentals, concepts, and tradeoffs, which means that this aspect actually matters probably significantly less than you’d think. Not all suggestions made are the sort of suggestions I feel tempted to immediately follow/implement in my daily work, but most of them at the very least makes you think a bit more about the choices you might be making, often subconsciously – and as the quotes below should incidentally serve to illustrate it’s not just a book about coding.

I have added some sample quotes from the chapters I’ve read so far below.

…

“Construction is a large part of software development. Depending on the size of the project, construction typically takes 30 to 80 percent of the total time spent on a project. […] Construction is the central activity in software development. Requirements and architecture are done before construction so that you can do construction effectively. System testing (in the strict sense of independent testing) is done after construction to verify that construction has been done correctly. […] With a focus on construction, the individual programmer’s productivity can improve enormously. A classic study by Sackman, Erikson, and Grant showed that the productivity of individual programmers varied by a factor of 10 to 20 during construction (1968). Since their study, their results have been confirmed by numerous other studies (Curtis 1981, Mills 1983, Curtis et al. 1986, Card 1987, Valett and McGarry 1989, DeMarco and Lister 1999, Boehm et al. 2000). This book helps all programmers learn techniques that are already used by the best programmers.”

“As much as 90 percent of the development effort on a typical software system comes after its initial release, with two-thirds being typical (Pigoski, 1997).”

“It generally doesn’t make sense to code things you can buy ready-made.”

“Choosing the right tool for each problem is one key to being an effective programmer.”

“Good architecture makes construction easy. Bad architecture makes construction almost impossible.”

“Good software architecture is largely machine- and language-independent.”

“Part of a programmer’s job is to educate bosses and coworkers about the software-development process, including the importance of adequate preparation before programming begins.”

“Both building construction and software construction benefit from appropriate levels of planning. If you build software in the wrong order, it’s hard to code, hard to test, and hard to debug. It can take longer to complete, or the project can fall apart because everyone’s work is too complex and therefore too confusing when it’s all combined. Careful planning doesn’t necessarily mean exhaustive planning or over-planning. You can plan out the structural supports and decide later whether to put in hardwood floors or carpeting, what color to paint the walls, what roofing material to use, and so on. A well-planned project improves your ability to change your mind later about details. The more experience you have with the kind of software you’re building, the more details you can take for granted. You just want to be sure that you plan enough so that lack of planning doesn’t create major problems later.”

“The overarching goal of preparation is risk reduction: a good project planner clears major risks out of the way as early as possible so that the bulk of the project can proceed as smoothly as possible. By far the most common project risks in software development are poor requirements and poor project planning […] You might think that all professional programmers know about the importance of preparation and check that the prerequisites have been satisfied before jumping into construction. Unfortunately, that isn’t so. A common cause of incomplete preparation is that the developers who are assigned to work on the upstream activities do not have the expertise to carry out their assignments. The skills needed to plan a project, create a compelling business case, develop comprehensive and accurate requirements, and create high-quality architectures are far from trivial, but most developers have not received training in how to perform these activities. […] Some programmers do know how to perform upstream activities, but they don’t prepare because they can’t resist the urge to begin coding as soon as possible. […] It takes only a few large programs to learn that you can avoid a lot of stress by planning ahead. Let your own experience be your guide. A final reason that programmers don’t prepare is that managers are notoriously unsympathetic to programmers who spend time on construction prerequisites.”

“One of the key ideas in effective programming is that preparation is important. It makes sense that before you start working on a big project, you should plan the project. Big projects require more planning; small projects require less. […] Researchers at Hewlett-Packard, IBM, Hughes Aircraft, TRW, and other organizations have found that purging an error by the beginning of construction allows rework to be done 10 to 100 times less expensively than when it’s done in the last part of the process, during system test or after release […]. In general, the principle is to find an error as close as possible to the time at which it was introduced. The longer the defect stays in the software food chain, the more damage it causes further down the chain. Since requirements are done first, requirements defects have the potential to be in the system longer and to be more expensive. Defects inserted into the software upstream also tend to have broader effects than those inserted further downstream. That also makes early defects more expensive. […] for example, an architecture defect that costs $1000 to fix when the architecture is being created can cost $15,000 to fix during system test. […] The cost to fix a defect rises dramatically as the time from when it’s introduced to when it’s detected increases. This remains true whether the project is highly sequential (doing 100 percent of requirements and design up front) or highly iterative (doing 5 percent of requirements and design up front). […] Dozens of companies have found that simply focusing on correcting defects earlier rather than later in a project can cut development costs and schedules by factors of two or more […]. This is a healthy incentive to find and fix your problems as early as you can.”

“Accommodating changes is one of the most challenging aspects of good program design. The goal is to isolate unstable areas so that the effect of a change will be limited to one routine, class, or package. […] Business rules tend to be the source of frequent software changes. […] Business systems projects tend to benefit from highly iterative approaches, in which planning, requirements, and architecture are interleaved with construction, system testing, and quality-assurance activities. […] Iterative approaches tend to reduce the impact of inadequate upstream work, but they don’t eliminate it. […] Iterative approaches are usually a better option for many reasons, but an iterative approach that ignores prerequisites can end up costing significantly more than a sequential project that pays close attention to prerequisites. […] One common rule of thumb is to plan to specify about 80 percent of the requirements up front, allocate time for additional requirements to be specified later, and then practice systematic change control to accept only the most valuable new requirements as the project progresses. Another alternative is to specify only the most important 20 percent of the requirements up front and plan to develop the rest of the software in small increments, specifying additional requirements and designs as you go. […] One key to successful construction is understanding the degree to which prerequisites have been completed and adjusting your approach accordingly […] The extent to which prerequisites need to be satisfied up front will vary with the project type […], project formality, technical environment, staff capabilities, and project business goals. […] Software being what it is, iterative approaches are useful much more often than sequential approaches are. […] Some projects do too much up front; they doggedly adhere to requirements and plans that have been invalidated by down-stream discoveries, and that can also impede progress during construction.”